TLDR: QUIC and Bolina are new UDP-based transport protocols that aim to deliver a low-latency, reliable, secure and fast connection between end-hosts. In this post I compare these two protocols that are (re-)designed for a faster web to understand how different they are from TCP and how exactly they improve network performance.

QUIC is here!

I’m sure you’ve already heard about QUIC. QUIC was born to answer the need for faster, easily deployable and evolvable transport protocols, providing the most relevant facilities of TCP (reliability, in-order delivery), TLS (security) and HTTP/2 (multiplexing).

In a wireless world, it is of the utmost importance that transport protocols cope well with scenarios where losses occur frequently. Looking into QUIC’s proposal, we see great improvements to connection establishment (0-RTT, transparent connection migration) but, as far as loss detection/recovery and congestion control are concerned, the improvements seem rather incremental, at least at first sight.

QUIC definitely simplifies the process of loss detection. However, it does not revisit loss recovery or congestion control, settling instead for suggesting the use of a TCP-based congestion control algorithm. Knowing that most TCP versions are clearly inefficient when operating over volatile networks (e.g. wireless, mobile), this seems rather limiting.

A different approach to unpredictable networks

That was the main reason why we built Bolina: we wanted a transport protocol that can adapt to unpredictable network environments, as is the case with modern 4G or Wi-Fi links. Bolina also supports 0-RTT and connection migration, but that was not enough to get the kind of performance we wanted. We needed to be super efficient in the presence of packet loss, latency and jitter.

We had a “simple” question at hand: what are the issues that prevent transport protocols from performing well in networks with highly dynamic conditions? Biased by our coding background, it all boiled down to the following for us:

- ARQ-based strategies are inefficient in the presence of packet losses:

  - They require feedback to learn that packets have been lost before those packets can be retransmitted, so every recovery costs at least one extra round trip (an impairment that SACK and fast retransmit mitigate but do not remove).

  - They depend heavily on detecting precisely which packets were lost (hard to do in networks with a lot of delayed/out-of-order packets); otherwise they end up sending redundant information.

- Deployed congestion control mechanisms do not reflect the evolution of networking technologies:

  - Packet loss is no longer caused only by congestion (lots of packets get lost when communicating over wireless or mobile networks).

  - Many more links now have large bandwidths, and loss-driven congestion control may use that bandwidth inefficiently, especially in networks with a large bandwidth-delay product (BDP).

How can we get rid of these limiting factors? Can we circumvent these design limitations? The short answer is “Yes, by using awesome coding schemes”!

TCP, QUIC and Bolina

QUIC is inspired by TCP (QUIC suggests the use of NewReno or Cubic as congestion control mechanisms) but has some very relevant differences, namely in terms of packet sequencing and loss detection. Nonetheless, the underlying transmission principle is the same: ARQ.

Bolina departs from these ARQ-type loss recovery strategies and uses a technique called network coding, where losses are repaired using coded packets that allow the recovery of the original data, irrespective of which packets were lost.
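To make the idea of coded packets concrete, here is a minimal sketch of random linear coding over GF(2), where each coded packet is the XOR of a random subset of the source packets. This is an illustration only: the post does not describe Bolina's actual coding scheme, and the function names (`encode`, `decode`, `transfer`) and the choice of GF(2) are assumptions made for the example. The receiver can decode as soon as it holds enough linearly independent combinations (the "degrees of freedom"), no matter which individual packets were dropped.

```python
import random

def encode(packets, rng):
    """Return (coefficients, payload): the XOR of a random non-empty subset of packets."""
    k = len(packets)
    coeffs = [rng.randint(0, 1) for _ in range(k)]
    if not any(coeffs):                      # avoid the useless all-zero combination
        coeffs[rng.randrange(k)] = 1
    payload = bytes(len(packets[0]))         # all-zero buffer of packet length
    for c, p in zip(coeffs, packets):
        if c:
            payload = bytes(a ^ b for a, b in zip(payload, p))
    return coeffs, payload

def decode(coded, k):
    """Gaussian elimination over GF(2); returns the k source packets or None if rank < k."""
    rows = [(list(c), bytearray(p)) for c, p in coded]   # work on copies
    for col in range(k):
        sel = next((r for r in range(col, len(rows)) if rows[r][0][col]), None)
        if sel is None:
            return None                      # not enough degrees of freedom yet
        rows[col], rows[sel] = rows[sel], rows[col]
        pivot_coeffs, pivot_payload = rows[col]
        for r in range(len(rows)):
            if r != col and rows[r][0][col]:
                rc, rp = rows[r]
                for i in range(k):
                    rc[i] ^= pivot_coeffs[i]
                for i in range(len(rp)):
                    rp[i] ^= pivot_payload[i]
    return [bytes(rows[i][1]) for i in range(k)]

def transfer(packets, loss_rate, seed=0):
    """Send coded packets over a lossy channel until the receiver can decode."""
    rng = random.Random(seed)
    received, sent = [], 0
    while True:
        coded = encode(packets, rng)
        sent += 1
        if rng.random() < loss_rate:
            continue                         # lost; the sender never needs to know which one
        received.append(coded)
        if len(received) >= len(packets):
            decoded = decode(received, len(packets))
            if decoded is not None:
                return decoded, sent

source = [b"AAAA", b"BBBB", b"CCCC", b"DDDD"]   # equal-length packets
decoded, sent = transfer(source, loss_rate=0.3)
print(decoded == source, "coded packets sent:", sent)
```

Note that the sender in this sketch never retransmits a specific packet: it simply keeps emitting fresh combinations until the receiver has enough of them, which is the property that removes the dependence on precise loss detection.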

Let’s put the strategies used by these protocols side by side.

| Protocol | Packet numbering | Acknowledgments | Loss detection | Loss recovery |
| --- | --- | --- | --- | --- |
| TCP | increasing packet numbers (retransmissions keep packet number) | used for loss detection (SACK) | three duplicate ACKs or timeout | retransmissions |
| QUIC | increasing packet numbers (retransmissions have new packet number) | used for loss detection (SACK/NACK with more holes) | packet threshold or timeout | retransmissions |
| Bolina | increasing packet numbers (no retransmissions) | used for link quality assessment (effective goodput) | degrees of freedom or timeouts | coded packets (new info every packet) |

From this table, we can see the big difference between QUIC and Bolina: while QUIC largely depends on feedback for all operations (loss detection, loss recovery and, implicitly, transmission of new data), Bolina needs feedback only to estimate link quality and receiver state (no need for loss detection, packet retransmissions or waiting for feedback to transmit new data).

While these differences by themselves eliminate some of the limitations of TCP-like transport protocols (the ones related to ARQ), what we do with the information carried in the feedback is also extremely relevant. For instance, it’s that information that allows Bolina to distinguish transmission errors from congestion-related losses.

Curious about the impact on performance? We’ve benchmarked it for you.

A first performance benchmark

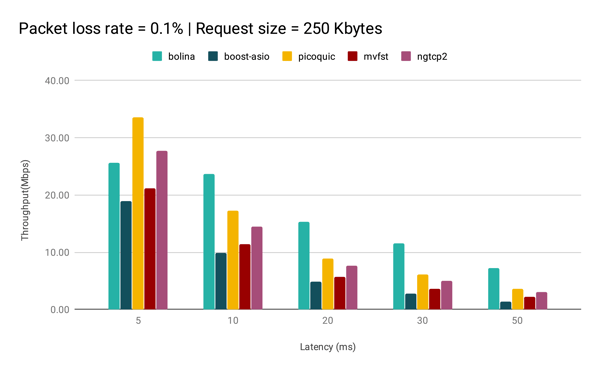

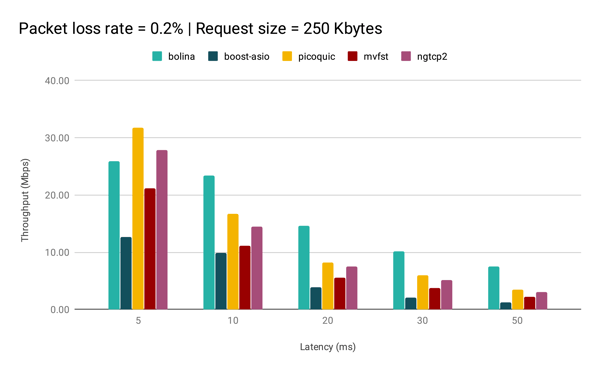

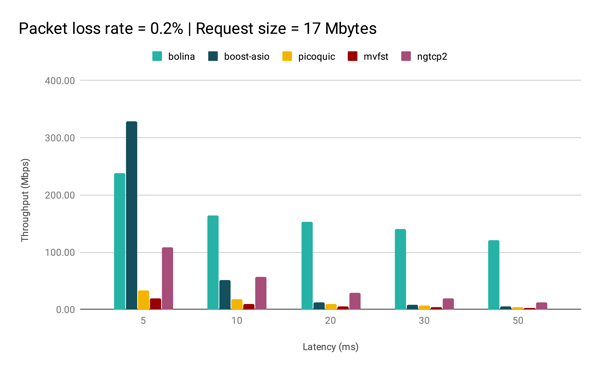

We set out to test some of the current QUIC implementations (ngtcp2, picoquic and mvfst through proxygen) against TCP (using boost-asio) and Bolina in settings where packet loss is present (you can check the details of the benchmark at the end of this post). The test was very simple: 25 sequential HTTP GET requests of a given number of bytes for specific latency and packet loss points. All QUIC implementations had 0-RTT enabled (the first request for each latency/packet loss point was 1-RTT and the remaining ones 0-RTT). Here's what we got:

We can see that QUIC clearly improves over TCP for all packet loss and latency points when we request around 250 KB of data. Great, this is what we expected. Now let’s compare QUIC with Bolina. QUIC performs better than Bolina at the lowest latency point (5ms), but for all remaining latencies Bolina outperforms QUIC. We will analyze the reasons for this shortly. But first let’s see how all protocols behave when the request is larger.

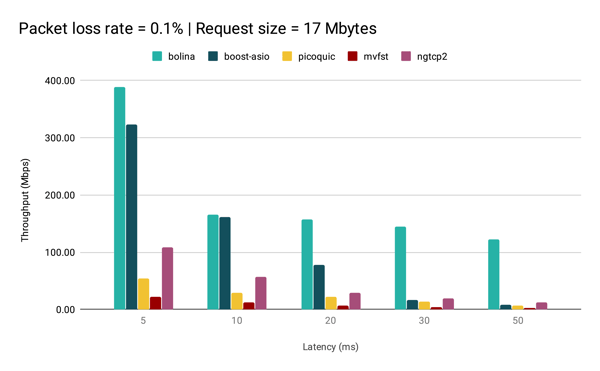

Things changed a bit! Now some QUIC implementations do not outperform TCP. In fact, they only achieve the performance of TCP when latency increases. Bolina, on the other hand, outperformed TCP and QUIC for all latency regions except the lowest latency point. What happened? We only changed the size of the request.

Performance: a primer (or why congestion control matters)

The improvements to the connection phase are most relevant when the connection is over within a few round trip times (i.e. for very short connections). 0-RTT connection resumption removes 2 RTTs from the connection lifetime. This means that if a request used to be served within 6 RTTs, it now takes only 4 RTTs (a decrease in completion time of 33.3%). This is an awesome improvement. But if the connection lifetime is a little bit longer, say 50 RTTs, then the connection finishes in 48 RTTs (a decrease in completion time of 4%). Still good, but not so noticeable.

This means that 0-RTT is a great feature, but only gives great benefit when serving smaller requests. In fact, the congestion control of the transport protocol is almost “unused” in these cases (most of the time is spent in the slow start phase and we never reach the channel limits). For larger requests, performance is actually dominated by the transport stage, which in turn is dictated by the congestion control algorithm and the loss detection/recovery mechanisms. The same holds in the presence of packet loss, since packets will be lost and we will need a few more round trips to complete the requests.
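The arithmetic above is easy to check. The short sketch below computes the relative saving for the two connection lifetimes used in the text; the function name `zero_rtt_saving` is just an illustrative helper, and the 2-RTT handshake saving is taken from the paragraph above.

```python
def zero_rtt_saving(total_rtts, saved_rtts=2):
    """Fraction of the completion time removed when `saved_rtts` round trips are skipped."""
    return saved_rtts / total_rtts

for total in (6, 50):
    saving = zero_rtt_saving(total)
    print(f"{total} RTTs -> {total - 2} RTTs: {saving:.1%} shorter")
# 6 RTTs -> 4 RTTs: 33.3% shorter
# 50 RTTs -> 48 RTTs: 4.0% shorter
```

The saving shrinks as 2/N with the connection lifetime N, which is why the transport stage dominates for larger requests.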

Where Bolina shines

And here is where Bolina shines. Built on top of network coding technology, Bolina offers a completely different perspective on transport protocols where loss is no longer king. We got rid of strict dependency on acknowledgments (we still need them but, unlike other transport protocols, not to know what to transmit next) and we got packet recovery “for free” (any packet can be used to recover the original data stream).

As a consequence, instead of treating losses of individual packets as signals of congestion, we can look at how much information is passing through the channel. If losses do not affect the effective goodput of the link, Bolina will not assume that the network is congested. You shouldn’t be tricked into thinking that Bolina only uses goodput to perform congestion control: it also uses RTT and jitter signals to help detect congestion. After all, we do not want latency to skyrocket!
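As a rough illustration of the difference between a loss-driven and a goodput-driven congestion signal, consider the toy check below. It is not Bolina's algorithm: the function names, the comparison against the previous goodput sample and both thresholds (`goodput_drop`, `rtt_inflation`) are assumptions invented for this sketch, and jitter is left out to keep it short.

```python
def loss_based_congested(lost_packets):
    """Classic loss-driven signal: any lost packet is read as congestion."""
    return lost_packets > 0

def goodput_based_congested(prev_goodput, goodput, rtt, min_rtt,
                            goodput_drop=0.2, rtt_inflation=1.5):
    """Hypothetical signal: react only if goodput collapses or RTT inflates."""
    goodput_fell = goodput < prev_goodput * (1 - goodput_drop)
    rtt_inflated = rtt > min_rtt * rtt_inflation
    return goodput_fell or rtt_inflated

# Random wireless loss: a few packets vanish, but goodput and RTT barely move.
print(loss_based_congested(lost_packets=3))                       # True
print(goodput_based_congested(1000.0, 980.0, 0.052, 0.050))       # False

# Real congestion: queues fill up, goodput drops and RTT climbs.
print(goodput_based_congested(1000.0, 600.0, 0.120, 0.050))       # True
```

In the first scenario a loss-driven sender would back off needlessly, while the goodput-driven check keeps its rate; in the second both would react.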

Here I discuss in detail the different strategies used by TCP, QUIC and Bolina to deal with network events, and how these design choices can impact wireless performance. If you're ready to try Bolina yourself, go ahead!

Code and benchmark details

I started this blog post with the intent of analyzing the performance of QUIC over channels with latency and packet loss. To do this, we chose three QUIC implementations: ngtcp2, picoquic and mvfst (through proxygen). We tested draft-20. All of them contain simple implementations of a QUIC client and QUIC server that we used to measure throughput.

You shouldn’t assume that these implementations are production-ready, as the QUIC working group is still building QUIC. This is relevant in the sense that they might contain bugs or pieces of underperforming code. If you want to test QUIC by yourself, we have a project on GitHub that you can access here.

Our initial goal was to test these QUIC libraries in a mobile environment, but none of them has mobile support. Thus, we had to perform the tests in a desktop environment, which in turn led us to build a Bolina Client for desktop solely for the purpose of this test.

Bolina Client is publicly available for Android and iOS and we’re working on building a QUIC SDK for mobile environments as well. In the meantime, in case you want to replicate the above results, please contact us to get the necessary Bolina Client binaries for desktop. In case you are curious about Bolina's applications, we have two use cases (video streaming and social networks) available here.

All tests were performed using HTTPS with Let’s Encrypt certificates generated through certbot.

The client and server machines had the following specs:

- 4 vCPUs

- 8GB RAM

They were connected through a sub-millisecond link (~0.5ms) with 2Gbps bandwidth.

To induce latency and packet loss, we used a tc-based emulator, which you can find here.
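For readers who want a feel for what tc-based emulation involves, the sketch below builds a `tc` command that uses the Linux `netem` queueing discipline to add delay and random loss on an interface. It is not the emulator used for these benchmarks: the helper name `netem_command`, the interface name `eth0` and the example values are assumptions, and the code only prints the command rather than running it (applying it requires root on a Linux machine).

```python
import shlex

def netem_command(iface, delay_ms, loss_pct):
    """Build a `tc ... netem` command adding fixed delay and random packet loss."""
    return [
        "tc", "qdisc", "add", "dev", iface, "root", "netem",
        "delay", f"{delay_ms}ms",
        "loss", f"{loss_pct}%",
    ]

# Hypothetical test point: 50 ms of added delay and 1% random loss on eth0.
cmd = netem_command("eth0", delay_ms=50, loss_pct=1)
print(shlex.join(cmd))
# tc qdisc add dev eth0 root netem delay 50ms loss 1%
```

Running `tc qdisc del dev eth0 root` afterwards removes the emulation again.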