TLDR: QUIC focuses on handshake optimization (which is great): it makes connection establishment at least twice as fast and dramatically reduces the impact of handover between different networks. However, the overall impact is incremental. Why? It fails to truly revisit transport protocols at their essence: it is still highly dependent on acknowledgments, and that does not suit the performance challenges of a wireless world.

UPDATE: Check our most recent performance analysis on QUIC.

You have probably heard about QUIC. Even if you haven’t, it’s highly likely that you have already used it: Google services on Chrome have been using QUIC for a few years now. The QUIC standardization process is also very close to being completed. QUIC is a valuable Google initiative for a new internet transport protocol, with the ultimate goal of reducing latency. Does QUIC achieve this goal? It does, but only with incremental impact.

QUIC focuses on handshake optimization (very important!) but fails to truly revisit transport protocols at their essence (the transport!). Why is this important? Because traditional transport techniques were designed for a world of wired users. That world is long gone, with unreliable and unpredictable wireless links now dominating the user experience. Are there other options? Yes: erasure coding, instead of ARQ.

A single handshake, instead of 2

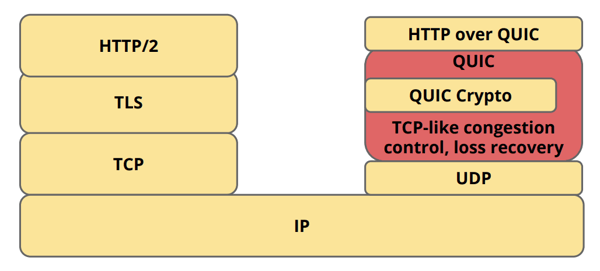

In a nutshell, QUIC replaces the combination of TCP and TLS, taking a cross-layer approach to transport and security. Underneath QUIC, UDP is used as “transport”. Why UDP? A very practical decision: building on UDP allows QUIC to be deployed quickly in user space, whereas modifying TCP would take ages to be adopted (more on network protocols here).

For a deeper understanding of QUIC, I recommend taking a look at Chromium Projects (QUIC at 10,000 feet is an excellent starting point). I also recommend the extraordinary talk “QUIC: Replacing TCP for the Web”, by Jana Iyengar (Fastly, ex-Google). Overall, the major benefits attributed to QUIC are:

- Connection establishment latency
  - Definitely the case! QUIC achieves 0-RTT for known servers.

- Improved congestion control
  - Marginal modifications. It’s not clear what the final option is going to be, but it seems that it will be based on TCP NewReno.

- Multiplexing without head-of-line blocking
  - QUIC does decrease the problem of head-of-line blocking. But not entirely.

- Forward error correction
  - No practical impact, at least for now.

- Connection migration
  - Very valuable feature: moving from WiFi to LTE without renegotiating the session!

In the end, QUIC does make connection establishment (at least) twice as fast and dramatically reduces the impact of handover between different networks. These are very important features, but their impact is incremental. Below, I expand on my thoughts about each of these main benefits.

Connection establishment latency

In standard HTTP+TLS+TCP, TCP needs a handshake to establish a session between server and client, and TLS needs its own handshake to ensure that the session is secured. QUIC only needs a single handshake to establish a secure session. As simple as that, you get the connection establishment time cut in half.

How? Simplifying (a lot!), the client sends a connection ID to the server, which then returns a token and the server’s public Diffie-Hellman values, allowing server and client to agree on an initial key. Client and server can start exchanging data immediately, while at the same time they establish a final session key. To learn more about QUIC’s security handshake, I recommend a very clear presentation by Robert Lychev (video, slides).

Moreover, similar to TLS 1.3, after the server and client “meet” for the first time, they cache session keys, so when a new request comes along no handshake is necessary. This is the so-called 0-RTT feature.
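To put rough numbers on this, here is a back-of-the-envelope sketch. It assumes the commonly cited round-trip counts (1 RTT for the TCP handshake, 2 for a full TLS 1.2 handshake, 1 for TLS 1.3, 1 for a first QUIC connection and 0 for a resumed one) and ignores packet loss and server processing time. The function name and the 100 ms RTT are purely illustrative.

```python
# Round trips spent on handshakes before the first request can be sent.
# Assumed, commonly cited counts; loss and server think time are ignored.
HANDSHAKE_RTTS = {
    "TCP + TLS 1.2": 1 + 2,
    "TCP + TLS 1.3": 1 + 1,
    "QUIC (first connection)": 1,
    "QUIC (0-RTT, known server)": 0,
}


def setup_delay_ms(stack: str, rtt_ms: float) -> float:
    """Time spent on handshakes before application data can flow."""
    return HANDSHAKE_RTTS[stack] * rtt_ms


if __name__ == "__main__":
    for stack in HANDSHAKE_RTTS:
        print(f"{stack:28s} {setup_delay_ms(stack, rtt_ms=100):6.0f} ms")
```

Under these assumptions, the saving grows linearly with the RTT, which is why the benefit is most visible on high-latency links.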

Improved congestion control

QUIC introduces a new sequence numbering mechanism. Every packet gets a new sequence number, including retransmissions, which enables a more accurate round-trip-time (RTT) calculation. However, QUIC’s congestion control is a traditional, TCP-like mechanism. It seems that the most recent option is NewReno, but you can also find references to the use of CUBIC or BBR. As we know from TCP, all of them have limitations, and choosing one becomes a trade-off problem.

Like TCP, QUIC is at its essence an ARQ protocol, i.e. feedback is required to recover from packet losses. This design choice leads to inefficiencies when evaluating link conditions and, in links where latency and losses are unstable, to a significant performance loss. Yes, I’m talking about wireless links, which are expected to support more than 63% of total internet traffic by 2021.
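Why do unique packet numbers help? With a reused sequence number, an acknowledgment that arrives after a retransmission could refer to either copy, so the RTT sample is ambiguous. The sketch below is a simplified illustration, not QUIC’s actual implementation: the class and method names are hypothetical, and the smoothing uses the classic exponentially weighted average with gain 1/8.

```python
class RttEstimator:
    """Tracks send times per packet number and smooths RTT samples."""

    def __init__(self):
        self.sent_at = {}   # packet number -> send timestamp (seconds)
        self.next_pn = 0
        self.srtt = None    # smoothed RTT, None until the first sample

    def on_send(self, now: float) -> int:
        # Every transmission, including a retransmission, gets a fresh number.
        pn = self.next_pn
        self.next_pn += 1
        self.sent_at[pn] = now
        return pn

    def on_ack(self, pn: int, now: float) -> float:
        # The acked number maps to exactly one transmission, so the sample
        # is unambiguous even if the data was sent more than once.
        sample = now - self.sent_at.pop(pn)
        if self.srtt is None:
            self.srtt = sample
        else:
            self.srtt = 0.875 * self.srtt + 0.125 * sample
        return sample


if __name__ == "__main__":
    est = RttEstimator()
    original = est.on_send(now=0.00)    # this copy is lost
    retransmit = est.on_send(now=0.30)  # same data, new packet number
    print(est.on_ack(retransmit, now=0.35))  # sample measured from 0.30, not 0.00
```

Had both copies shared one sequence number, the sender could not tell whether the sample should be about 0.05 s or 0.35 s.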

There is a much more efficient way to handle losses: erasure codes. More on that later.

Multiplexing

Multiplexing is very important because it mitigates head-of-line (HOL) blocking. HOL blocking is what happens when you request multiple objects and a small object gets stuck because a preceding large object was delayed. By using multiple streams, lost packets carrying data for an individual stream only impact that specific stream. Therefore, QUIC does significantly decrease HOL blocking, but not entirely: within a stream, data must still be delivered in order, so a loss still stalls the stream it belongs to.

Also, allowing the developer to choose which requests are the most important, based on characteristics other than their size, could be tremendously helpful in improving the overall user experience.
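A toy model makes the difference concrete. The function below is a hypothetical illustration (not code from any QUIC stack): given packets in send order and the index of one lost packet, it returns what the application can read before the retransmission arrives, either with a single ordered byte stream (TCP-like) or with per-stream ordering (QUIC-like).

```python
def deliverable(arrivals, lost, per_stream):
    """Packets readable by the application before the lost one is retransmitted.

    arrivals:   list of (stream_id, payload) in send order
    lost:       index into arrivals of the dropped packet
    per_stream: True  = ordering enforced per stream (QUIC-like)
                False = one ordered byte stream for everything (TCP-like)
    """
    blocked_streams = set()
    out = []
    for i, (stream, payload) in enumerate(arrivals):
        if i == lost:
            blocked_streams.add(stream)
            if not per_stream:
                break  # everything behind the hole has to wait
            continue
        if stream in blocked_streams:
            continue  # same stream: still waiting behind its own hole
        out.append((stream, payload))
    return out


if __name__ == "__main__":
    packets = [("A", "a1"), ("B", "b1"), ("A", "a2"), ("B", "b2")]
    print(deliverable(packets, lost=0, per_stream=False))
    print(deliverable(packets, lost=0, per_stream=True))
```

With per-stream ordering, stream B is unaffected by the loss on stream A, but stream A itself is still stalled, which is the residual HOL blocking.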

Forward error correction

I’ve previously mentioned erasure codes as a smarter way to handle packet loss, and QUIC does indeed consider the potential use of Forward Error Correction (FEC) techniques. However, the experiments with FEC in QUIC were (not) surprisingly discouraging. The approach was to use XOR-based FEC, which can only recover a single packet per protected group. This means that if two or more packets are lost, the FEC packet becomes useless. The overhead then impairs speed and, in the end, support for XOR-based FEC was removed from QUIC in early 2016.

This is actually not surprising. Traditional FEC is a purely proactive loss recovery scheme, which means that the server will send more packets than necessary (decreasing goodput) or fewer than necessary (not decreasing delay), and only very rarely the optimal amount. Traditional FEC has the problem of not adapting to fluctuating channel characteristics. Unreliable, unpredictable links are the problem. Yep, wireless.
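The single-loss limitation of XOR parity is easy to see in code. This is a minimal sketch of generic XOR-based FEC over a group of equal-length packets, not the scheme QUIC actually shipped; the function names are illustrative.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets):
    """One parity packet protecting a group of equal-length packets."""
    return reduce(xor_bytes, packets)


def recover(received, parity):
    """received: list with None in place of lost packets.

    Returns the full group, or None if more than one packet is missing.
    """
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) > 1:
        return None  # a single XOR parity cannot resolve two unknowns
    repaired = list(received)
    if missing:
        present = [p for p in received if p is not None]
        # parity XOR all surviving packets = the one missing packet
        repaired[missing[0]] = reduce(xor_bytes, present, parity)
    return repaired


if __name__ == "__main__":
    group = [b"\x05", b"\x0e", b"\x03"]
    parity = make_parity(group)
    print(recover([group[0], None, group[2]], parity))  # one loss: repaired
    print(recover([None, None, group[2]], parity))      # two losses: None
```

In the second case the parity packet was transmitted but contributes nothing, which is the wasted overhead described above.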

A recent IETF draft mentions the use of FEC to improve QUIC performance with real-time sessions, arguing that FEC makes packet loss recovery insensitive to the round trip time. This is a promising evolution! Still too early to assess how that will behave out in the wild, but promising nevertheless.

Connection migration

Not much to say here. QUIC identifies a connection by its own unique identifier, the connection ID, rather than by the IP addresses and ports of the endpoints. This makes it possible to switch networks and keep the same connection ID. So handover is not a problem anymore (except for the long handover times, but that’s not something a transport protocol can solve).
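The idea can be sketched with two lookup tables. This is a hypothetical, heavily simplified server (class and method names are made up for illustration): a TCP-style lookup keyed on the address 4-tuple loses the session when the client’s address changes, while a lookup keyed on the connection identifier does not.

```python
class Server:
    def __init__(self):
        self.by_four_tuple = {}  # TCP-style: (src ip, src port, dst ip, dst port)
        self.by_conn_id = {}     # QUIC-style: connection identifier

    def open(self, conn_id, four_tuple, session):
        self.by_four_tuple[four_tuple] = session
        self.by_conn_id[conn_id] = session

    def lookup_tcp_style(self, four_tuple):
        return self.by_four_tuple.get(four_tuple)

    def lookup_quic_style(self, conn_id):
        return self.by_conn_id.get(conn_id)


if __name__ == "__main__":
    server = Server()
    on_wifi = ("192.0.2.10", 50000, "203.0.113.1", 443)
    on_lte = ("198.51.100.7", 41000, "203.0.113.1", 443)  # address after handover
    server.open(conn_id=0xC0FFEE, four_tuple=on_wifi, session="session-state")

    print(server.lookup_tcp_style(on_lte))     # None: the 4-tuple changed
    print(server.lookup_quic_style(0xC0FFEE))  # session survives the handover
```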

The downside of QUIC

Performance

Google claims that QUIC reduced the latency of Google Search responses by 3.6% and YouTube video buffering by 15.3% (which, although small, is still an interesting improvement, given that speed truly matters). In the aforementioned talk by Jana Iyengar, we see these numbers in greater detail:

| Country | % Reduction in Search Latency (Desktop) | % Reduction in Search Latency (Mobile) | % Reduction in Rebuffer Rate (Desktop) | % Reduction in Rebuffer Rate (Mobile) |
| --- | --- | --- | --- | --- |
| South Korea | 1.3% | 1.1% | 0.0% | 10.1% |
| USA | 3.4% | 2.0% | 4.1% | 12.9% |
| India | 13.2% | 5.5% | 22.1% | 20.2% |

These numbers are consistent with the findings reported in the 2016 paper “How quick is QUIC?”, where the authors compare QUIC with SPDY and HTTP. The results show that QUIC performs well under high latency conditions, in particular for low bandwidth, which is in line with the performance results reported in India (above). The authors also show that QUIC is the best option when we are talking about small objects.

At the same time, the results show that QUIC does not perform well for large amounts of data in very high bandwidth networks. Most surprisingly, the authors claim that, when comparing QUIC, SPDY and HTTP, “none of these protocols is clearly better than the other two and the actual network conditions determine which protocol performs the best.”

UDP throttling

QUIC works on top of UDP, so it only works when a UDP path is available between client and server. If UDP is not available, QUIC falls back to standard HTTP over TCP, ensuring that the end user still gets the desired content. But what happens when facing UDP throttling, for example in an enterprise or public network? Since UDP passes through, QUIC is capable of establishing a connection. However, because UDP is throttled, falling back to TCP would deliver a much higher speed!

Another example of UDP throttling can be found in QoS rules in some home routers, which can lead to Google sites loading very slowly in Chrome.

The solution? Manually disable QUIC, contact the operators and ask them to stop throttling, according to a 2017 paper by Google. That doesn’t sound very practical. A UDP throttling detection mechanism would be of much greater assistance, since it could trigger an automatic fallback to TCP, ensuring that the end user has the best experience possible.

Usability

QUIC is still in the standardization process, so it is naturally still a work in progress. Therefore, using QUIC at this stage requires a significant amount of effort. Using QUIC in a mobile app requires:

- Set up QUIC server(s) and deploy them globally, according to the distribution of end users

- Add QUIC client code to the app

- Since no official mobile SDK is available, developers must either build their own or rely on third-party frameworks

- Support multiple OSes (iOS, Android, Windows)

- Enable HTTP fallback, since some assets might not benefit from QUIC and need HTTP

- Some networks block QUIC to avoid service interruption

- Find out how to handle UDP throttling

However, with the efforts we are seeing from Google, Facebook, Cloudflare, Fastly and Uber (among many others), standardization is on the way and I’m sure the complexity of using QUIC will decrease very fast.

QUIC: ARQ, again. How about erasure codes?

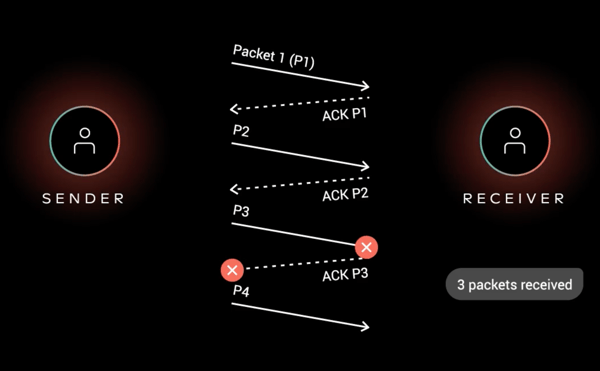

I’ve already mentioned a few times that QUIC is, in its essence, an ARQ-based protocol. ARQ protocols fundamentally rely on feedback information to recover from packet loss. Simplifying, the sender expects the receiver to individually acknowledge the reception of each packet and does not move forward until this acknowledgment arrives. In case a loss is detected, the sender then retransmits the lost packet, ensuring reliability. A simple example:

The problem with this approach is that it is highly impaired by the RTT of the link, since the sender needs to wait for the acknowledgment from the receiver. In other words, although QUIC efficiently reduces the connection establishment time, QUIC is highly impaired by latency in the actual transport of the data, as with any TCP session!
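To see how directly the RTT drives transfer time in the simplified ARQ model described above, consider this rough estimate. It assumes the deliberately simplified behavior from the text (one packet in flight, acknowledged before the next is sent), independent losses, a lost packet costing one extra RTT, and negligible serialization time. Real TCP and QUIC keep a window of packets in flight, so this is an illustration of the dependency, not a performance model; the function name is hypothetical.

```python
def stop_and_wait_time_ms(n_packets: int, rtt_ms: float, loss: float = 0.0) -> float:
    """Expected time to deliver n_packets when each must be acked before the next.

    Assumes independent losses with probability `loss` and that each failed
    attempt costs one RTT (i.e. the retransmission timeout equals the RTT).
    """
    expected_attempts = 1.0 / (1.0 - loss)
    return n_packets * expected_attempts * rtt_ms


if __name__ == "__main__":
    for rtt in (20, 100, 300):
        for loss in (0.0, 0.05):
            t = stop_and_wait_time_ms(4, rtt, loss)
            print(f"rtt={rtt:3d} ms  loss={loss:4.0%}  ->  {t:7.1f} ms")
```

In this model, the time scales linearly with the RTT and grows further with every loss, since each recovery needs another round of feedback.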

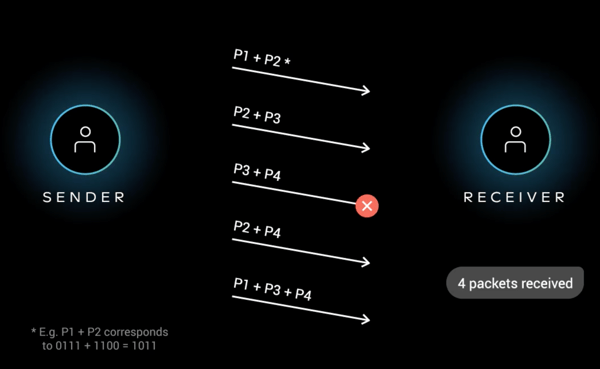

So, how to overcome losses without the need for acknowledgments? With erasure codes! For those not familiar with them, the idea is to mix the original packets and use linear algebra to ensure that, no matter which n transmissions get to the receiver, the receiver is capable of recovering all n original packets. The following simple example will help:

The sender does not need to wait for any acknowledgment before sending a new (coded) packet, while the receiver recovers all 4 original packets. And we are not sending more data or larger packets! For clarity, P1+P2 = 0101 + 1110 = 1011 (a bitwise XOR), i.e. a coded packet has the same size as an original packet. (This post by Steinwurf provides spectacular animations to illustrate the benefits of erasure codes over ARQ. Take a look!)
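The mixing and recovery can be sketched in a few lines. This is a minimal illustration over GF(2) (plain XOR), not the code used by any particular product: packets are 4-bit integers, a coded packet carries a bitmask saying which originals were XORed together, and the receiver decodes by Gaussian elimination. P1 and P2 are the values from the example above; P3, P4 and the masks are made up. With XOR-only coefficients the received combinations must be linearly independent to decode; codes over larger fields make a random set of n packets decodable with much higher probability.

```python
def encode(packets, mask):
    """XOR together the packets selected by `mask` (bit i selects packets[i])."""
    payload = 0
    for i, p in enumerate(packets):
        if mask >> i & 1:
            payload ^= p
    return mask, payload


def decode(coded, n):
    """Gaussian elimination over GF(2) on a list of (mask, payload) pairs.

    Returns the n original packets, or None if the received combinations
    are not linearly independent (or fewer than n were received).
    """
    rows = list(coded)
    for col in range(n):
        pivot = next((r for r in range(col, len(rows)) if rows[r][0] >> col & 1), None)
        if pivot is None:
            return None
        rows[col], rows[pivot] = rows[pivot], rows[col]
        pmask, ppayload = rows[col]
        for r in range(len(rows)):
            if r != col and rows[r][0] >> col & 1:
                rows[r] = (rows[r][0] ^ pmask, rows[r][1] ^ ppayload)
    return [rows[i][1] for i in range(n)]


if __name__ == "__main__":
    originals = [0b0101, 0b1110, 0b0011, 0b1000]        # P1..P4
    masks = [0b0011, 0b0110, 0b1101, 0b1000, 0b0111]    # 5 coded packets sent
    sent = [encode(originals, m) for m in masks]
    received = sent[:1] + sent[2:]                      # the second one is lost
    print(decode(received, 4) == originals)
```

The sender never learns which coded packet was lost and does not need to: any sufficient set of independent combinations lets the receiver rebuild everything.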

Given that the sender does not rely on acknowledgments for ensuring the reliable delivery of all packets, this approach is tremendously more robust to latency or latency variation (jitter). Naturally, it is also much more robust to packet loss. And where do we see latency, jitter and packet loss? In wireless networks!

Breaking up with ARQ

You might be wondering “But why hasn’t FEC helped QUIC?”. The answer is “simple”: because, although QUIC does foresee the use of FEC, it still is, in its essence, highly dependent on acknowledgments. Moreover, as I mentioned above, not every kind of erasure code is suited for scenarios where losses are unstable and unpredictable.

Therefore, we must choose a coding technique that is capable of quickly adapting to fluctuating channel conditions, making sure no packet transmission is wasted. It should also be able to learn from usage and automatically improve its coding and transmission decisions. And that’s precisely what we have done in our transport protocol, Bolina. Its secret sauce is a special kind of erasure code called network codes, which we tailored for volatile links and low latency requirements. And we got something like this: