Kubernetes is one of the more prominent tools bridging the gap between cloud-native and cloud-agnostic development. Acting as what can be described as an operating system for the cloud, Kubernetes defines and manages a set of resources that serve as abstractions for the deployment, scaling, and management of cloud infrastructure and applications. Through this layer of abstraction, developers can move or replicate workloads and infrastructure across different cloud providers and on-premises locations with relative ease.

But as with all abstraction layers, and following The Law of Leaky Abstractions, there ain’t no such thing as a free lunch. Sprinkling Kubernetes into your technology mix also adds a new layer of complexity and introduces new challenges for your development team; my colleague Miguel describes one such challenge in great depth in his latest multi-part blog series. Managing the definition and configuration of Kubernetes resources is no trivial task, and like most teams starting out, we implemented our own in-house tooling to manage the configuration and deployment of our clusters. However, we came to realize that we had bitten off more than we could chew. Here are the primary issues that contributed to configuration sprawl and made configuring our clusters and their services intractable:

- Configuration drift caused by not accounting for all possible environments and their volatility. Initially, we predicted that there would be four distinct environments: development, staging, QA, and production. In reality, environments are highly dynamic and depend on current business requirements and the organization’s structure. At one point, we had at least 15 distinct environments running at the same time, most of them development environments! As our processes did not account for all these environments, most of them ended up living in separate branches, which is NOT how you want to manage environments. It became hard to keep track of changes and to replicate them across other environments. Here is a hot tip to get a grip on how many environments you have: there are usually more environments than clusters, as each cluster contains at least one environment. If you believe you have fewer environments than clusters running, give it a moment’s thought and consider in which scenarios you may need a cluster’s deployment to deviate from its common environment, and how that affects your internal processes.

- Lack of distinction between environment-specific configuration and application-level configuration and settings. Configuration files became bloated with irrelevant fields that never changed across environments (fields that used to be relevant, or that were originally included as part of an application’s configurable parameters), and these fields were replicated across every environment. This compounding noise made it increasingly hard to track differences between environments and to ensure that configuration changes covered all past and future environments. It became common to accidentally break environments because of structural configuration changes or even simple version mismatches. As a direct result, processes slowly ground to a halt and productivity decreased. Exacerbating these effects, some of our services would get caught in the blast radius of misconfigured environments.

Rethinking our approach

We decided to bite the bullet and start over with a clean slate. To promote hygiene and maintainability, we want to treat the configuration of our clusters as part of the codebase. To offset the added complexity, we can take advantage of this fresh start to clean up the configuration and turn irrelevant fields into hard-coded values. The Configuration Complexity Clock offers an interesting perspective on the different stages of complexity your configuration management can reach.

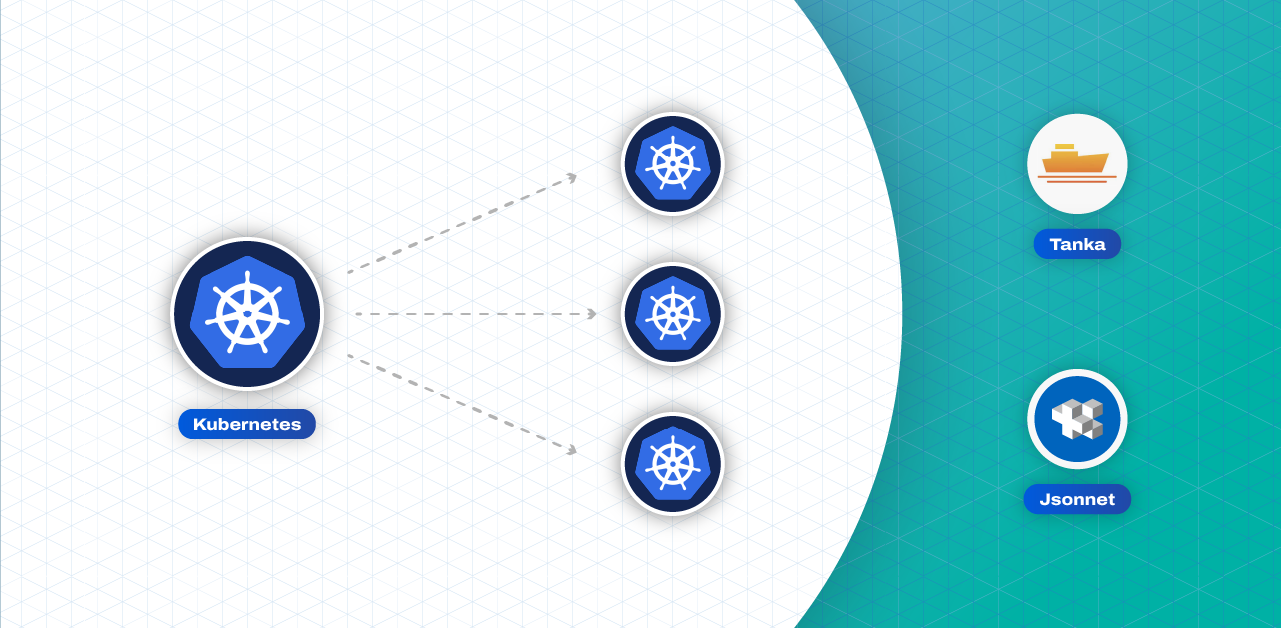

When designing our solution, we want to strike the right balance between simplicity, agility, flexibility, and expressivity for our use cases. As a CDN provider, our product is deployed in multiple locations, while new infrastructure, features, and improvements are developed and validated in development and staging environments. We therefore expect to have a large number of clusters running, so it should be easy to navigate the differences in configuration between environments and to apply them to other environments. It should be easy to understand which features and new settings are being used in a staging environment undergoing validation, and it should be straightforward to apply them to one or more production environments after validation. To achieve this goal, we want to be able to aggregate and encapsulate the configuration of a certain feature or component into a single declarative unit: a configuration variant. These configuration variants should be mostly independent of each other, produce no side effects, and always evaluate to the same output (referential transparency). With this abstraction, we can conceptualize a configuration model based on feature declarations rather than resource declarations, where environments are defined as sets of composable and pluggable configuration variants.

Following this model, the difference in configuration between two environments A and B is the set of variants that are present in A but not in B, and the set of variants that are present in B but not in A. Applying features and new settings from one environment to another consists of adding the related configuration variants to the desired environment. Changes in configuration are made inside the variants, and environments using those variants automatically use the new changes — if your context requires some environments to not use the new changes, then you should split the variants or add a new one, an approach that highlights the distinction between environments using and not using the changes. To implement such an ambitious workflow, we need some top-shelf tooling to match.
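As a quick illustration of this model, here is a minimal Jsonnet sketch (Jsonnet being the tool we settle on below) that represents each environment as a set of variant names and computes both halves of the difference with the standard library’s `std.set` and `std.setDiff`. The variant names are hypothetical labels chosen for the example, not files from our repository.

```jsonnet
// Each environment modeled as a sorted set of variant names (hypothetical labels).
local envA = std.set(['base', 'ccm-digital-ocean', 'certificate-renewal']);
local envB = std.set(['base', 'ccm-google']);

{
  // Variants present in A but not in B.
  onlyInA: std.setDiff(envA, envB),
  // Variants present in B but not in A.
  onlyInB: std.setDiff(envB, envA),
}
```

This evaluates to an object whose `onlyInA` field is `["ccm-digital-ocean", "certificate-renewal"]` and whose `onlyInB` field is `["ccm-google"]`; together, the two lists are the full configuration difference between A and B.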

Selecting the right tools for the job

The age-old struggle of configuration management has also struck the Kubernetes community. Although Kubernetes offers a declarative model for configuring resources with human-readable YAML, it leaves out several related aspects, such as organizing and managing divergent configuration elements, prompting the community to develop its own solutions on top. Of these solutions, the following two are the most prominent in the community:

- Helm is a package manager for Kubernetes with templating capabilities. With Helm, you can create and install packages, known as charts, which bundle a set of Kubernetes resources. While data templating is not its primary focus, charts may also contain templates that are instantiated with default or user-provided values through a `values.yaml` file. Since any desired variation in configuration has to be expressed by providing different values, it becomes harder to express more complex variations that require different Kubernetes resources or involve multiple charts. And because charts cannot extend the configuration of other charts, we cannot leverage them to scope features, improvements, or other configuration variants.

- Kustomize is a native configuration manager, built into `kubectl`, which allows Kubernetes configurations to be customized by declaring overlays: sets of patches applied on top of a set of bases, which may themselves be other overlays or sets of resources. With this level of customization, overlays can be used to encapsulate a set of patches pertaining to a certain feature or improvement; however, you are restricted to a single inheritance tree to map your environment definitions. To address this limitation, it became possible to compose overlays through Components, which offer a complement and alternative to extending overlays.

Kustomize was one of our initial candidates for our configuration overhaul, but as we delved deeper into configuration management tools, Jsonnet, a superset of JSON for data templating, emerged as a more attractive candidate. Jsonnet’s design article describes its foundations in functional principles, which align with the declarative and composable nature of our proposed model. Here are a couple of design features that caught our attention:

- The hermeticity principle: Jsonnet code is guaranteed to generate the same JSON regardless of the system environment, which aligns with our approach of modeling variants as pure computation elements.

- Prototype-based object inheritance with mixin support: Jsonnet also borrows object-oriented programming semantics. By declaring configuration variants as mixins, we can leverage the inheritance operator to compose multiple variants.
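To make these properties concrete, here is a minimal, self-contained sketch (the field names are made up for the example): each mixin is a plain object that only touches the fields it cares about, `+` layers the mixins from left to right, and `+:` merges into an inherited field instead of replacing it.

```jsonnet
// Base object (hypothetical fields, for illustration only).
local base = {
  server: { replicas: 1, image: 'bolina-server' },
};

// Mixin that scales the server; `+:` merges into the inherited `server` object.
local scaled = {
  server+: { replicas: 3 },
};

// Mixin that derives a new value from the inherited one through `super`.
local tagged = {
  server+: { image: super.image + ':canary' },
};

// Mixins are applied left to right on top of the base.
base + scaled + tagged
```

This evaluates to a `server` object with `replicas: 3` and `image: 'bolina-server:canary'`. Using a plain `:` instead of `+:` in either mixin would replace the whole `server` object rather than patch it, which is worth keeping in mind when writing variants.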

Combining both of these properties, we can implement environment definitions composed of pluggable configuration variants with a very simple syntax. Although Kubernetes resources are usually declared in YAML, they map directly from the JSON generated by Jsonnet, since JSON is itself valid YAML. To simplify the integration of Jsonnet with the management of our Kubernetes cluster configurations, we use Tanka. To help illustrate the advantages of our declarative, feature-oriented configuration model, here is a small, simple example showing how you can use Jsonnet and Tanka to manage multiple clusters.

Managing multiple clusters with Jsonnet and Tanka

When creating a Tanka project, you will find that it is structured around three main directories:

- `environments/`, containing a set of environment declarations. With these declarations, Tanka can apply the configuration of an environment to a Kubernetes cluster. Each environment declaration consists of two files:
  - `main.jsonnet`, the entry point of our configuration, which we use to declare the set of configuration variants that are part of the environment;
  - `spec.json`, an environment configuration file consumed by Tanka that, for instance, specifies the cluster to which the environment should be applied;
- `lib/`, containing the local Jsonnet libraries used by environment declarations. This is where we implement our configuration variants;
- `vendor/`, containing external Jsonnet libraries managed by Jsonnet Bundler.

Let’s go back to our initial scenario with 15 distinct environments running and reduce it to a simpler one, with three production environments and one QA environment. All four environments run a Deployment of the bolina server, which can be declared as follows:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: bolina
  namespace: default
  labels:
    app.kubernetes.io/name: 'bolina'
    app.kubernetes.io/component: 'bolina'
    app.kubernetes.io/part-of: 'codavel-cdn'
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: 'bolina'
      app.kubernetes.io/component: 'bolina'
      app.kubernetes.io/part-of: 'codavel-cdn'
  template:
    metadata:
      labels:
        app.kubernetes.io/name: 'bolina'
        app.kubernetes.io/component: 'bolina'
        app.kubernetes.io/part-of: 'codavel-cdn'
    spec:
      containers:
        - name: bolina
          image: bolina-server
          ports:
            - name: bolina-tcp
              containerPort: 9001
              protocol: TCP
            - name: bolina-udp
              containerPort: 9002
              protocol: UDP
```

With Jsonnet, we can declare the same resource as shown below. We use hidden fields to separate the container declaration from the deployment, and to make it easier to modify values that tend to be changed by other variants:

```jsonnet
// lib/codavel-cdn/components/bolina.libsonnet
{
  local values = $.values.bolina,

  // Hidden values that other variants are expected to tweak.
  values+:: {
    bolina: {
      name: 'bolina',
      namespace: 'default',
      labels: {
        'app.kubernetes.io/name': values.name,
        'app.kubernetes.io/component': 'bolina',
        'app.kubernetes.io/part-of': 'codavel-cdn',
      },
      image: 'bolina-server',
    },
  },

  bolina: {
    // Hidden container declaration, referenced (not copied) by the deployment.
    container:: {
      name: values.name,
      image: values.image,
      ports: [
        { name: values.name + '-tcp', containerPort: 9001, protocol: 'TCP' },
        { name: values.name + '-udp', containerPort: 9002, protocol: 'UDP' },
      ],
    },

    deployment: {
      apiVersion: 'apps/v1',
      kind: 'Deployment',
      metadata: {
        name: values.name,
        namespace: values.namespace,
        labels: values.labels,
      },
      spec: {
        replicas: 1,
        selector: {
          matchLabels: values.labels,
        },
        template: {
          metadata: {
            labels: values.labels,
          },
          spec: {
            containers: [$.bolina.container],
          },
        },
      },
    },
  },
}
```
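Because the tunable values live in the hidden `values` field, another variant can adjust them without repeating any resource definition. Below is a minimal sketch of such a variant; the file name and the image tag are hypothetical, chosen only for illustration.

```jsonnet
// Hypothetical variant, e.g. lib/codavel-cdn/variants/bolina-image.libsonnet.
// It only overrides a hidden value consumed by the bolina component.
{
  values+:: {
    // `+:` keeps the component's name, namespace, and labels intact.
    bolina+: {
      // Every field that reads `values.image` (here, the container image) picks this up.
      image: 'bolina-server:2.0.0',
    },
  },
}
```

Composed after the component, for example `(import 'codavel-cdn/main.libsonnet') + (import 'codavel-cdn/variants/ccm/google.libsonnet') + (import 'codavel-cdn/variants/bolina-image.libsonnet')`, the container picks up the new image, because the component reads `values` through `$`, which is bound to the final composed object. With a plain `bolina:` instead of `bolina+:`, the override would discard the other values and the component would fail to evaluate. Overriding `name` works the same way and also feeds the labels and selector, but keep in mind that a Deployment’s `spec.selector` is immutable once created.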

Each of the three production clusters uses a different cloud provider (Google Cloud, Oracle Cloud Infrastructure, and DigitalOcean), while the QA cluster also runs on DigitalOcean; each provider requires a specific implementation of the Cloud Controller Manager (CCM). The QA cluster is also testing a new feature, a system that automates the renewal of certificates in bolina servers, which is not present in the production environments.

Directory structure

Here is the directory structure of a Tanka project for this scenario — we have created a codavel-cdn module with a set of configuration variants, and a set of mandatory base components that are imported in main.libsonnet.

```text
├── environments
│   ├── prod-mumbai-gcp
│   │   ├── main.jsonnet
│   │   └── spec.json
│   ├── prod-hyderabad-oci
│   │   ├── main.jsonnet
│   │   └── spec.json
│   ├── prod-bangalore-do
│   │   ├── main.jsonnet
│   │   └── spec.json
│   └── qa
│       ├── main.jsonnet
│       └── spec.json
├── lib
│   ├── codavel-cdn
│   │   ├── variants
│   │   │   ├── ccm
│   │   │   │   ├── digital-ocean.libsonnet
│   │   │   │   ├── google.libsonnet
│   │   │   │   └── oracle.libsonnet
│   │   │   └── certificate-renewal.libsonnet
│   │   ├── components
│   │   │   └── bolina.libsonnet
│   │   └── main.libsonnet
│   └── utils.libsonnet
└── vendor
```

Environment declarations

Following this structure, we can declare the environments like below. Judging from the declarations alone, it is clear which cloud provider is being used in each environment, and that the QA environment has an extra feature enabled. Adding the certificate renewal feature to a production environment is as simple as adding the line declaring that variant.

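```jsonnet
// environments/prod-mumbai-gcp/main.jsonnet
(import 'codavel-cdn/main.libsonnet')
+ (import 'codavel-cdn/variants/ccm/google.libsonnet')
```

```jsonnet
// environments/prod-hyderabad-oci/main.jsonnet
(import 'codavel-cdn/main.libsonnet')
+ (import 'codavel-cdn/variants/ccm/oracle.libsonnet')
```

```jsonnet
// environments/prod-bangalore-do/main.jsonnet
(import 'codavel-cdn/main.libsonnet')
+ (import 'codavel-cdn/variants/ccm/digital-ocean.libsonnet')
```

```jsonnet
// environments/qa/main.jsonnet
(import 'codavel-cdn/main.libsonnet')
+ (import 'codavel-cdn/variants/ccm/digital-ocean.libsonnet')
+ (import 'codavel-cdn/variants/certificate-renewal.libsonnet')
```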
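As the number of variants per environment grows, the chain of `+` operators can also be written as a fold over a list. The sketch below assumes a hypothetical `compose` helper (not part of Tanka or the Jsonnet standard library) and uses inline stand-in objects so that it evaluates on its own.

```jsonnet
// Hypothetical helper: compose a list of mixins left to right with `+`.
local compose(variants) = std.foldl(function(acc, variant) acc + variant, variants, {});

// Inline stand-ins for main.libsonnet and two variants (illustration only).
local main = { components: { bolina: { replicas: 1 } } };
local ccmDigitalOcean = { ccm: { provider: 'digital-ocean' } };
local certificateRenewal = { components+: { certificateRenewal: { schedule: '0 0 1 * *' } } };

// Same result as: main + ccmDigitalOcean + certificateRenewal
compose([main, ccmDigitalOcean, certificateRenewal])
```

In a real `main.jsonnet`, the list would hold the `import` expressions shown above. The result matches the explicit `+` chain because `std.foldl` applies the operator left to right, starting from an empty object.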

Applying rules and restrictions to variant composition

Given the flexibility Jsonnet provides, we may also want to restrict environment declarations to a set of rules. For example, an environment needs a CCM, and an environment can only have one CCM implementation. We can express these restrictions in a simple way with object assertions, which Jsonnet evaluates lazily against the final, composed object.

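```jsonnet
// lib/codavel-cdn/main.libsonnet
{
  // `$` is the final environment object, so this sees every composed variant.
  assert 'ccm' in $ : 'must provide a CCM implementation',
  values:: {},
}
+ (import './components/bolina.libsonnet')
```

```jsonnet
// lib/codavel-cdn/variants/ccm/google.libsonnet (every CCM variant follows this shape)
{
  // Fails if a CCM variant was already composed before this one.
  assert !('ccm' in super) : 'can only have one CCM implementation',
  ccm: {
    // CCM implementation
  },
}
```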
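To see both assertions at work without the rest of the project, here is a self-contained sketch with inline stand-ins for `main.libsonnet` and a CCM variant; the `ccm(provider)` function and the `app` field are hypothetical simplifications. Only the `valid` case is active; uncommenting either of the other two fields should make evaluation fail with the corresponding message.

```jsonnet
// Inline stand-in for main.libsonnet (simplified; `app` is a placeholder field).
local base = {
  // Checked against the final composed object, whatever variants follow.
  assert 'ccm' in $ : 'must provide a CCM implementation',
  app: { name: 'bolina' },
};

// Hypothetical helper producing a CCM variant for a given provider.
local ccm(provider) = {
  // Fails if an earlier variant in the chain already defined `ccm`.
  assert !('ccm' in super) : 'can only have one CCM implementation',
  ccm: { provider: provider },
};

{
  // Exactly one CCM variant: both assertions pass.
  valid: base + ccm('google'),

  // Uncomment to fail with 'must provide a CCM implementation':
  // noCcm: base,

  // Uncomment to fail with 'can only have one CCM implementation':
  // twoCcms: base + ccm('google') + ccm('oracle'),
}
```

The order of the variants does not matter for the one-CCM rule: whichever CCM variant comes second in the chain finds `ccm` in `super`.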

Modifying resources in variants

The inheritance operator is also a perfect fit for modifying configuration from base resources or other variants. In our scenario, the certificate renewal feature consists of a CronJob that keeps a ConfigMap with the certificate updated, so we need to mount the certificate ConfigMap on the bolina container.

```jsonnet
// lib/codavel-cdn/variants/certificate-renewal.libsonnet
{
  local values = $.values.certificateRenewal,

  values+:: {
    certificateRenewal: {
      name: 'certificate-renewer',
      namespace: 'default',
      labels: {
        'app.kubernetes.io/name': values.name,
        'app.kubernetes.io/component': 'bolina',
        'app.kubernetes.io/part-of': 'codavel-cdn',
      },
      image: 'artifact.codavel.com/bolina-certificate-renewer',
      schedule: '0 0 1 * *',  // 00:00 on the first day of every month.
    },
  },

  certificateRenewal: {
    container:: {
      name: values.name,
      image: values.image,
    },

    cronJob: {
      apiVersion: 'batch/v1',
      kind: 'CronJob',
      metadata: {
        name: values.name + '-cronjob',
        namespace: values.namespace,
        labels: values.labels,
      },
      spec: {
        schedule: values.schedule,
        jobTemplate: {
          spec: {
            backoffLimit: 1,
            template: {
              spec: {
                containers: [$.certificateRenewal.container],
                restartPolicy: 'Never',
              },
            },
          },
        },
      },
    },

    configMap: {
      apiVersion: 'v1',
      kind: 'ConfigMap',
      metadata: {
        name: values.name + '-configmap',
        namespace: values.namespace,
        labels: values.labels,
      },
      // `binaryData` expects base64-encoded values; the mounted files contain the decoded PEM.
      binaryData: {
        'full_chain.pem': std.base64(importstr 'secrets/full_chain.pem'),
        'priv_key.pem': std.base64(importstr 'secrets/priv_key.pem'),
      },
    },
  },

  // Patch the bolina component: mount the certificate ConfigMap in its container.
  bolina+: {
    container+:: {
      volumeMounts+: [
        {
          name: 'bolina-certificates-volume',
          mountPath: '/usr/codavel/certificates/',
        },
      ],
    },

    deployment+: {
      spec+: {
        template+: {
          spec+: {
            volumes+: [
              {
                name: 'bolina-certificates-volume',
                configMap: { name: $.certificateRenewal.configMap.metadata.name },
              },
            ],
          },
        },
      },
    },
  },
}
```
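The variant can patch `container` and have the Deployment pick up the change because the component references the container through `$` instead of embedding a copy. Here is a reduced, self-contained sketch of that mechanism; the `app` names are placeholders, not part of our configuration.

```jsonnet
// Reduced component: the deployment references the hidden container via `$`.
local component = {
  app: {
    container:: { name: 'app', image: 'app-image' },
    deployment: { containers: [$.app.container] },
  },
};

// Reduced variant: patches only the hidden container.
local variant = {
  app+: {
    container+:: { volumeMounts+: [{ name: 'certs', mountPath: '/certs' }] },
  },
};

// `$` inside `component` is late-bound to this composed object.
component + variant
```

Only `deployment` is manifested (`+::` keeps `container` hidden), and its single container now carries the `volumeMounts` added by the variant. Had the deployment embedded the container object directly, the variant would have needed to patch the `containers` array itself, which is awkward because Jsonnet arrays can only be concatenated, not merged element by element.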

Choosing your own configuration model

Consider your use cases before designing a configuration model and picking a tool. Jsonnet is a powerful tool, but it has a steeper learning curve than its alternatives. If you have few configuration variations to manage, you are probably best served by Helm or Kustomize. I recommend getting a basic understanding of the tools you are considering so that you can better understand the tradeoffs between them and find out which one best fits your desired workflow.

If you really do need to pack a punch, then hopefully this little example gave you a good understanding of how you can use Jsonnet to manage your Kubernetes configurations. If you need more inspiration on how to structure and level up your Jsonnet code, the Jsonnet Training Course provides some good examples and use cases in a more structured format. If you are looking for alternatives to Tanka for applying Jsonnet to your Kubernetes cluster configurations, you can also check out kubecfg or Kapitan. If Jsonnet did not strike a chord with you, you will be pleased to know that there are other languages with similar goals. Dhall, which describes itself as JSON plus functions, types, and imports, is another programmable configuration language, and CUE (a personal favorite) has a very interesting composition model and provides type checking and inference through a unified concept of values, types, and constraints.

Regardless of your choice, I hope this information helps you unravel the intricacies of configuration management in Kubernetes and choose the appropriate tools. Once you chart a course and walk the path, the next stop is GitOps. Stay tuned!
