TL;DR Mobile app users are highly impatient, particularly when it comes to video startup time: users start abandoning a video after only 2 seconds of buffering. Optimizations such as using a CDN and adjusting video size are crucial for ensuring a fast video startup, but they are not enough, as the wireless last mile makes video startup time highly volatile. The problem lies in HTTP's inability to handle the natural instability of wireless links. At Codavel, we ran our own tests to evaluate HTTP performance and, like many others in the industry, what we saw was high and volatile video startup time, with more than 68% of user sessions having a startup time above 2 seconds. I also provide an overview of what Codavel has to offer to solve these challenges.

Why is mobile video streaming still a challenge?

From modern work habits to the way we consume entertainment, it’s impossible to ignore the meteoric rise of video streaming services, especially on mobile devices, where the time spent on the top 5 video streaming apps grew by 140% between 2016 and 2018. On top of this growth, users are also more demanding than ever, expecting seamless video streaming experiences and growing less and less patient once they press the play button. Therefore, the so-called video startup time - the time from when the viewer presses play to when the first frame of the video is displayed - has a tremendous impact on user experience and, consequently, on key business metrics.

Want some proof?

- Conviva’s report shows that video startup time got 11% worse on mobile (9% worse in Asia alone), and that a staggering 5.1B viewing hours were lost due to buffering.

- Akamai found that viewers start to abandon a video if it takes more than 2 seconds to start and that, on top of that, abandonment rates can be expected to jump 5.8% for each additional second of delay in video startup time.

- Snapchat reports an even more dramatic increase in the demand for faster video startup times, witnessing their entire user base moving to the next “snap” or just leaving the app if it takes 2 seconds or more to load.

- Facebook was only recording data on the user’s viewership after they had watched a video for 3 seconds, later finding that a significant share of viewers had dropped from the video before 3 seconds had passed.
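The Akamai figures above can be turned into a rough estimate of how abandonment grows with startup time. The sketch below is only an illustration: it assumes a simple linear model (no abandonment up to 2 seconds, then 5.8 percentage points per extra second, capped at 100%), and the function and constant names are hypothetical.

```python
# Rough linear model built from the Akamai figures quoted above.
# The linear shape and the 100% cap are simplifying assumptions.
ABANDON_THRESHOLD_S = 2.0  # viewers start leaving after 2 seconds
ABANDON_RATE_PER_S = 5.8   # percentage points per additional second


def estimated_abandonment(startup_time_s: float) -> float:
    """Return an estimated abandonment rate in percent (0 to 100)."""
    extra_seconds = max(0.0, startup_time_s - ABANDON_THRESHOLD_S)
    return min(100.0, extra_seconds * ABANDON_RATE_PER_S)


for startup in (1.5, 2.0, 3.0, 5.0, 10.0):
    rate = estimated_abandonment(startup)
    print(f"{startup:>4.1f}s startup -> ~{rate:.1f}% estimated abandonment")
```

Under this model, every second shaved off a slow startup recovers roughly the same share of viewers, which is why the long tail of startup times matters as much as the typical case.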

In fact, users really value speed, with 74% considering it one of the most important attributes in video streaming. And guess who they blame when their apps are not performing as expected - more than half (55%) hold the app responsible, even if the problem lies in the network. Even worse, 53% end up uninstalling the app after experiencing performance issues. Given this, it’s no surprise that, according to Verizon, OTT video services delivering average or poor-quality experiences are losing as much as 25% of their revenue.

There are many factors that can impair video performance KPIs like startup time, such as the type of video encoding, the displayed quality or the CDN in use, but undoubtedly one of the most important is network latency.

Network latency has a high impact on the performance of any application, and the problem is that it is very hard to control and predict, as it depends strongly on the quality of the network link, which, as we will see, is still an ongoing problem. Although it is not a streaming app, Uber perfectly suits our case here, as it’s known as a highly optimized app built on top of a very robust infrastructure. And what they see is not good news - they experience high network response times across the globe, with the scenario being even more challenging in regions like India, Southeast Asia or South America.

Uber - Tail-end latencies across the major cities

This happens because, in contrast with wired networks, wireless networks have unique characteristics and challenges: they are highly susceptible to losses from interference and signal attenuation, which result in much higher (e.g. 4-10x) and more variable round-trip times (RTTs) and packet loss when compared to their wired counterparts. The result is not only high loading times but also highly variable and inconsistent network response times, with significant variations depending on the carrier, location, or even time of day.

Uber - Tail-end latencies, variations between carriers, time, and days
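To see why a 4-10x higher RTT translates into slower loading, a back-of-the-envelope model helps: if starting a video requires a fixed number of sequential round trips, the time to the first frame scales linearly with the RTT. The sketch below assumes exactly that. The baseline RTT and the number of round trips are illustrative assumptions, not measured values, and bandwidth and packet loss are ignored.

```python
# Back-of-the-envelope model: startup time ~ sequential round trips x RTT.
# All numbers below are illustrative assumptions, not measurements.
WIRED_RTT_MS = 30.0  # assumed baseline RTT on a wired link
ROUND_TRIPS = 6      # assumed sequential round trips before the first frame


def startup_estimate_s(rtt_ms: float, round_trips: int = ROUND_TRIPS) -> float:
    """Estimate startup time in seconds, ignoring bandwidth and packet loss."""
    return rtt_ms * round_trips / 1000.0


for factor in (1, 4, 10):  # 1x = wired baseline, 4x and 10x = wireless range above
    rtt_ms = WIRED_RTT_MS * factor
    print(f"{factor:>2}x RTT ({rtt_ms:.0f} ms) -> ~{startup_estimate_s(rtt_ms):.2f}s")
```

Under these assumptions, the same startup sequence goes from roughly 0.2s on the wired baseline to between about 0.7s and 1.8s on a wireless link, before accounting for any retransmissions caused by packet loss.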

It’s not only Uber identifying these problems. Quoting the well-known Akamai study on unlocking mobile application performance, “Cellular connections, which currently comprise about 38% of all mobile access on the Akamai network, are particularly challenging. They not only are subject to high latency, but also suffer from highly variable congestion rates – even on relatively fast 4G networks – resulting in uneven end-user response times. This makes it difficult for mobile app developers to deliver the consistent, high-performance experience their users want.”

Or, as a post on the Twitter Engineering Blog puts it, “in many locations, latency is too high for users to consistently access their Twitter content”.

While the discussion on the topic has become more heated recently, the problem is not new. From investments in cloud infrastructure (e.g. CDNs) to all kinds of video optimizations, the industry’s efforts have focused on delivering the best experience to increasingly demanding users. But the truth is that there are still some paths left to try.

The one to blame - HTTP is not suited for mobile apps

HTTP was designed in the early 1990s as an application-layer protocol that runs over TCP. More recently, in 2015, its second version, HTTP/2, was released and presented as a faster, more reliable and more efficient version of the old HTTP. But when it comes to today’s needs and the growing demand for mobile content, even this latest version seems to struggle. As stated by Fastly, “while HTTP/2 performs better than HTTP/1 in most situations, it isn’t as good when connections are bad - such as a high-loss connection, or one with a bit of loss and a lot of latency”. The Uber Engineering Blog also reinforces this idea - “Our mobile apps require low-latency and highly reliable network communication. Unfortunately, the HTTP/2 stack fares poorly in dynamic, lossy wireless networks.”

You may think “ok, let’s optimize HTTP then”. Well, that doesn’t seem to be an option, as it implies “forcing a ‘selective penalty’ on some users, for improving the content delivery for others”, as stated on LinkedIn’s engineering blog. In other words, HTTP can’t be optimized to deliver a faster and more consistent video experience for everyone without destroying the experience for some.

Conducting our own HTTP testing - Codavel

At Codavel, we wanted to observe this phenomenon on our own, so we decided to conduct an analysis of video startup time in a mobile app, for 1700 different user sessions in India.

Unsurprisingly, what we found were signs of big problems: the average video startup time was 5.05s, whereas the median startup time was 2.86s, which means the distribution is skewed to the right, with a long tail of high video startup times, clearly pointing to a suboptimal user experience.

| | HTTP |
|---|---|
| Average | 5.049s |
| Median | 2.863s |
| p90 | 11.291s |
| p95 | 42.563s |

In fact, while the median video startup time lies below 3s, the 90th percentile is 11.3s and the 99th percentile is 42.6s. In other words, from the data we collected, video startup time is not only high, but also very volatile.
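For readers who want to run the same kind of summary on their own data, here is a minimal sketch using Python's standard `statistics` module. The sample values are made up for illustration and are not Codavel's measurements; the `summarize` function name and the 2-second threshold parameter are assumptions of this sketch.

```python
import statistics


def summarize(startup_times_s, threshold_s=2.0):
    """Summarize startup times (in seconds) by central tendency and tail."""
    ordered = sorted(startup_times_s)
    # quantiles(n=100) returns the 99 cut points p1..p99.
    percentiles = statistics.quantiles(ordered, n=100, method="inclusive")
    above = sum(1 for t in ordered if t > threshold_s)
    return {
        "average": statistics.mean(ordered),
        "median": statistics.median(ordered),
        "p90": percentiles[89],
        "p95": percentiles[94],
        "p99": percentiles[98],
        "share_above_threshold": above / len(ordered),
    }


# Hypothetical sample, NOT the data behind the table above.
sample = [0.9, 1.4, 1.8, 2.1, 2.5, 2.9, 3.4, 4.8, 7.5, 21.0]
for name, value in summarize(sample).items():
    print(f"{name}: {value:.3f}")
```

Even in this tiny made-up sample, a single slow session pulls the average (4.83s) well above the median (2.7s), which is the same right-skewed pattern described above.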

HTTP startup times dispersion

| | HTTP |
|---|---|
| Std. deviation | 7.600s |
| Interquartile range | 3.366s |
| Mean absolute deviation | 4.093s |
| Median absolute deviation | 2.820s |
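The four dispersion measures in the table can be computed as in the sketch below. It is an illustration only: the `dispersion` function name is hypothetical, the sample standard deviation and the "inclusive" quartile method are assumptions (the exact conventions behind the table are not stated), and the input data is made up.

```python
import statistics


def dispersion(startup_times_s):
    """Compute four dispersion measures for startup times (in seconds)."""
    ordered = sorted(startup_times_s)
    mean = statistics.mean(ordered)
    median = statistics.median(ordered)
    # Quartiles: quantiles(n=4) returns the three cut points Q1, Q2, Q3.
    q1, _, q3 = statistics.quantiles(ordered, n=4, method="inclusive")
    return {
        # Sample standard deviation (assumption; population would be pstdev).
        "std_deviation": statistics.stdev(ordered),
        "interquartile_range": q3 - q1,
        "mean_absolute_deviation": statistics.mean(
            [abs(t - mean) for t in ordered]
        ),
        "median_absolute_deviation": statistics.median(
            [abs(t - median) for t in ordered]
        ),
    }


# Hypothetical sample, NOT the data behind the table above.
for name, value in dispersion([1.0, 2.0, 3.0, 4.0, 5.0]).items():
    print(f"{name}: {value:.3f}")
```

The interquartile range and the median absolute deviation are much less sensitive to a handful of extremely slow sessions than the standard deviation, so a large gap between them is itself a sign of a heavy tail.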

Our take on the problem

As we have seen, HTTP is incapable of handling the natural instability of Wi-Fi or 4G links, and this has been undermining the ability to deliver high-quality video content to millions of users.

The mobile app industry has already identified the need to replace existing HTTP with new protocols prepared to handle the volatility of wireless links. This is why industry leaders like Google, Facebook or Uber have been investing heavily in a new protocol, QUIC, which evolved into HTTP/3. However, QUIC has two major drawbacks: it is an engineering and operational nightmare to use, and its performance improvements are somewhat limited. I'll spare you the details here, but in case you're curious, take a look here.

At Codavel, we devote ourselves to this fundamental challenge: building a mobile-first protocol, robust to wireless link instability, that can be used effortlessly by mobile apps. That is what you get with Codavel Performance Service and our groundbreaking protocol, Bolina, which clearly outperforms both HTTP and QUIC / HTTP/3.

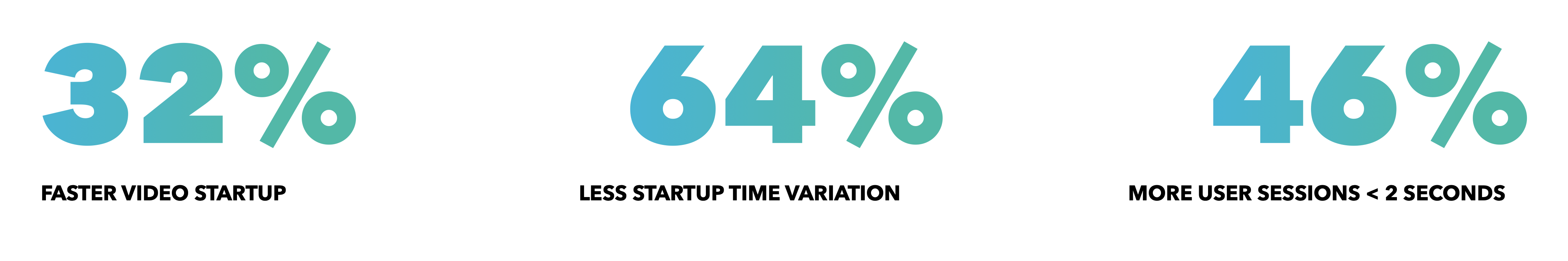

To prove it, we ran the same tests, using the same methodology as for the HTTP results above, and analyzed the behavior of Codavel Performance Service with respect to video startup time for users in India. The results showed two key improvements over fully optimized HTTP: higher performance and more stability.

Want to know more about our testing and results?

Download the report here and talk with us: we can help you achieve the same results!