People tend to associate A/B testing with creating a couple of layouts for a company’s landing page and comparing the conversion rates of each one. While this is an important use case, A/B testing is much more than testing web pages or layouts, and it can be applied just as well to mobile apps and their features.

Although there are some things to watch out for before starting the tests, I’ll show you some real examples of how testing small changes with real users can have a tremendous impact on the app’s success.

Getting feedback directly from your target users is a great way to improve their experience and, as a result, your conversion metrics. That’s why companies such as Facebook and Netflix run thousands of experiments each year.

Why you should start A/B testing

A/B testing consists of creating two variants (let's call them A and B) that differ slightly from each other, testing how they behave under similar circumstances, and understanding which one performs better.

When applied to software, it basically means creating two versions of a product, where typically the first (A) is the latest stable version and the second (B) is the same as A but with a few (more on this later) modified variables. Both versions are then distributed over the same period to different users while you collect metrics that allow a direct comparison of how each version performs. If B is considered better than A, then B becomes the new version.
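To make the "direct comparison" a bit more concrete, here is a minimal sketch in Python of how the metrics collected for each version could be compared. It assumes the metric is a simple conversion rate; the `VariantStats` class, the `relative_lift` helper and the numbers are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Counters collected for one variant during the experiment (hypothetical)."""
    users: int = 0        # users who were exposed to this variant
    conversions: int = 0  # users who completed the goal action

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.users if self.users else 0.0


def relative_lift(a: VariantStats, b: VariantStats) -> float:
    """Relative change of B's conversion rate over A's (0.10 means +10%)."""
    if a.conversion_rate == 0:
        raise ValueError("variant A has no conversions; lift is undefined")
    return (b.conversion_rate - a.conversion_rate) / a.conversion_rate


# Made-up numbers, for illustration only.
a = VariantStats(users=5000, conversions=400)
b = VariantStats(users=5000, conversions=450)
print(f"A: {a.conversion_rate:.1%}  B: {b.conversion_rate:.1%}")
print(f"Relative lift of B over A: {relative_lift(a, b):+.1%}")
```

A positive lift alone does not mean B wins: whether the difference is large enough to trust depends on how many users were in each group.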

This is a broad concept that can be applied to test pretty much every change in your product. It does not require a lot of effort to set up a basic A/B test, and it allows product owners to evaluate a new feature or change based on real data. By deploying the change to only a subset of the users, you limit its impact in scenarios where something goes wrong, such as when B performs worse than A. If done properly, you should have no doubts about whether a specific change should be made to your product and what you will gain from it.

The Angry Birds example

A basic example of what you could test is having different screenshots to promote your app. That’s what Rovio tested before they launched the second version of Angry Birds, by comparing, for example, the impact of having horizontal or vertical images in the app store.

After some iterations, the team decided which images worked better. That decision (in case you are wondering, among other changes, they went with the vertical images) led to a 13% increase in conversion, which represented 2.5M additional installs during the first week after release.

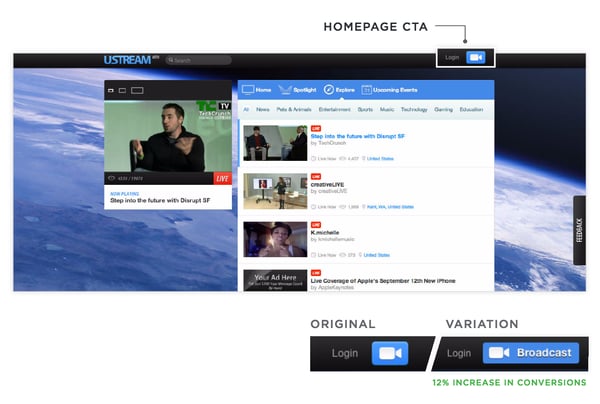

Ustream’s minor changes

Even small modifications inside the app may have a huge impact on the product, as the A/B test performed by Ustream, shown below, demonstrates. Although they assumed that the camera icon would speak for itself, they made a version in which they added a “Broadcast” label next to the icon. They then tested that small change on a subset of 12,000 users. The conclusion was that having the label was 12% more effective.


Going further than layouts

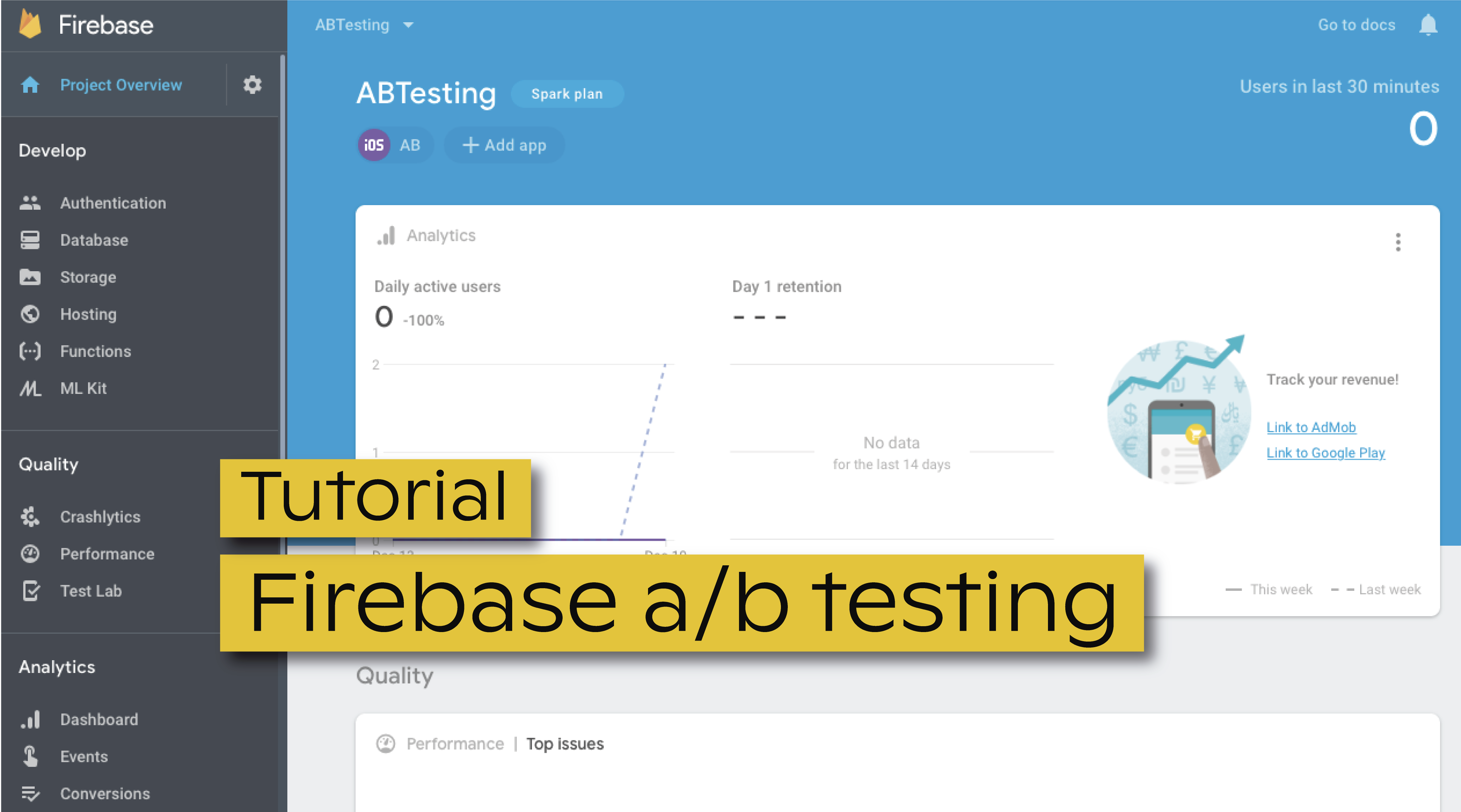

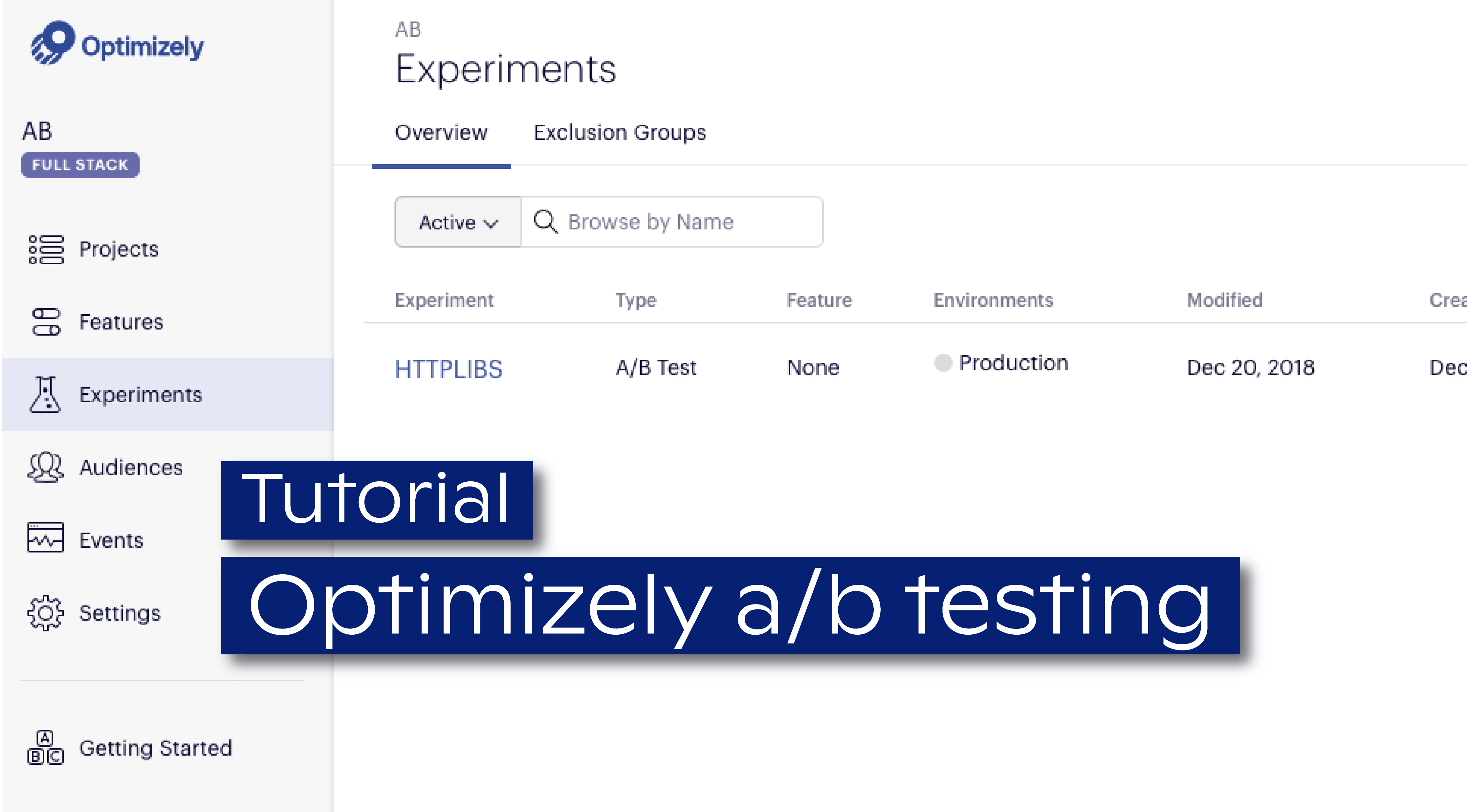

You have probably run into many examples of A/B tests, including the ones above, that focus on creating two layout versions of a website or an app. A/B testing goes way beyond that: it can be successfully used to test almost every feature of a mobile app - even architectural changes.

Imagine that you own an app with several thousand active users who do a lot of transfers from a remote server. You know by now that bad app performance costs you money, so when you run into a new HTTP library that promises incredible performance, you decide to give it a shot. The integration is pretty smooth and the lab results are promising. But is that enough? Do you feel comfortable deploying the new app to your entire user base at once? Probably not.

That’s when A/B testing is the right approach: you randomly select a subset of your users (let’s say 10%) that will use this new app version for a couple of weeks. After the experiment is over, you compare the results and confirm that the performance is indeed better. Great! You can now push this version to all your users, or increase the subset of users in a new A/B test until you feel comfortable enough to make it the latest stable version.
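One common way to pick that random subset is to hash each user ID into a bucket, so a given user always lands in the same version. The sketch below is only an illustration of that idea, not the API of any particular framework: the `assign_variant` function, the experiment name and the user IDs are all hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, rollout_percent: float = 10.0) -> str:
    """Return "B" for roughly `rollout_percent`% of users and "A" for the rest.

    Hashing the experiment name together with the user ID keeps the
    assignment stable across app launches and independent between experiments.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to a bucket in [0, 10000).
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return "B" if bucket < rollout_percent * 100 else "A"


# Hypothetical user IDs and experiment name, for illustration only.
users = [f"user-{i}" for i in range(100_000)]
share_b = sum(assign_variant(u, "new-http-library") == "B" for u in users) / len(users)
print(f"Share of users in B: {share_b:.2%}")
```

Raising `rollout_percent` in a follow-up test keeps the users who were already in B there, because their bucket does not change; only new users are added to the subset.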

A/B testing is not as easy as it seems

By now you are probably feeling excited about A/B testing and ready to start, right? But before leaving you to explore it and play around, here are some tips that will help you succeed:

- Clearly define your goals: A/B testing does not necessarily mean that the B version differs from the A version in only one variable. However, changing too much will make it impossible to understand which change led to a performance boost or what made things worse. Focus on what you really want to test!

- Which version performed better? One common mistake is to start the A/B test without a clear vision of what you are trying to achieve with the change. You should clearly define beforehand the expected impact of the change being tested and how you are going to measure it. The version that performed better should be the one that took you closer to your goal.

- Try to make fair comparisons: A/B testing is basically statistical inference and, as such, both variants should be evaluated on a significant subset of your users. Both versions should also be tested under similar conditions, which, depending on your use case, could mean testing at the same time of day, on similar platforms/devices, in similar regions, etc.

- Don't jump to early conclusions: While it might be exciting to start the A/B test and instantly receive good feedback from the new version, decisions should not be based on a specific user or on a small number of samples. You should collect data over a significant amount of time and from a significant number of users, and only when you feel confident enough about the results (I strongly recommend reading this post about calculating statistical confidence) should you start the analysis to decide which version to choose.

- Don’t build your own tool: Setting up an A/B test can involve a lot of effort (and time!) if you are building your own tool from scratch. However, there are a lot of A/B testing frameworks available to choose from that will probably satisfy your needs. In case you are wondering which ones I recommend... we'll have news on that soon!
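The tips about fair comparisons and early conclusions can be made a little more tangible with a quick significance check. The sketch below uses a two-proportion z-test, which is one standard option and only my assumption of a reasonable choice when the metric is a conversion rate; the function name and the sample numbers are invented for the example.

```python
import math
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("not enough variation in the data to run the test")
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Same conversion rates (8% vs 9%), different sample sizes; numbers are made up.
z_small, p_small = two_proportion_z_test(40, 500, 45, 500)
z_large, p_large = two_proportion_z_test(4000, 50_000, 4500, 50_000)
print(f"500 users per variant:    z={z_small:.2f}, p={p_small:.3g}")
print(f"50,000 users per variant: z={z_large:.2f}, p={p_large:.3g}")
```

With only a few hundred users per variant, the one-point difference is well within what chance alone could produce, while the same difference over tens of thousands of users is very unlikely to be a fluke. That is the reason to wait before declaring a winner.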

Most importantly, remember that A/B testing is not just about the testing itself, but also about learning. You should understand the results and why your users favored one version.

Good luck and happy (A/B) testing!