It wasn’t that long ago that the Internet was a repository of information. We would mostly access static content from web pages with a small amount of traffic and serving just a few local regions. But that reality quickly changed.

Today, the Internet is a true expressway to a myriad of services that consume far more data and are intended to serve users worldwide (e.g. e-commerce, video streaming, data backup…) (source). Content is now a big part of our daily lives, so we want to access it immediately and have very little patience for delays: 49% of users expect apps to respond in 2 seconds or less, and they judge an app's or web page's performance by how long it takes to respond.

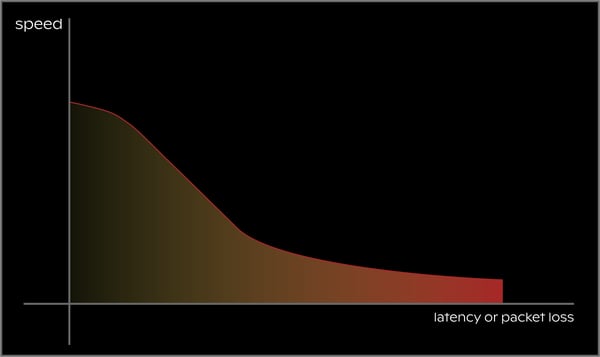

But not everything is perfect in this new world: speed is deeply affected by link instability (packet loss) and distance (latency), which have been increasing with the boom of the Internet. And Internet protocols were not originally designed to deal with these issues.
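
To put rough numbers on how loss and latency limit speed, here is a minimal sketch of the well-known Mathis et al. approximation for steady-state TCP throughput, roughly MSS / RTT × 1.22 / √p. It is a simplified model (it ignores timeouts and newer congestion-control algorithms), and the segment size, RTTs and loss rates below are hypothetical values chosen only for illustration.

```python
import math

def mathis_throughput_bps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Approximate upper bound on steady-state TCP throughput, in bits per second.

    Mathis et al. model: throughput <= (MSS / RTT) * (C / sqrt(p)), with C = sqrt(3/2).
    """
    if rtt_s <= 0 or not 0 < loss_rate < 1:
        raise ValueError("rtt_s must be > 0 and 0 < loss_rate < 1")
    c = math.sqrt(1.5)
    return (mss_bytes * 8 / rtt_s) * (c / math.sqrt(loss_rate))

# Hypothetical link conditions: 1460-byte segments, varying distance (RTT) and loss.
for rtt_ms, loss in [(20, 0.0001), (100, 0.0001), (100, 0.01)]:
    mbps = mathis_throughput_bps(1460, rtt_ms / 1000, loss) / 1e6
    print(f"RTT {rtt_ms:>3} ms, loss {loss:.2%}: ~{mbps:.1f} Mbit/s")
```

In this model a 5× longer round trip cuts the bound 5×, and 100× more packet loss cuts it 10×, which is why both distance and link instability matter so much.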

Caching as a solution to all problems

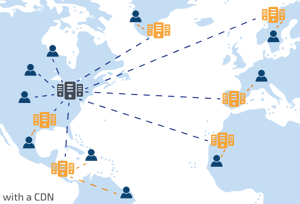

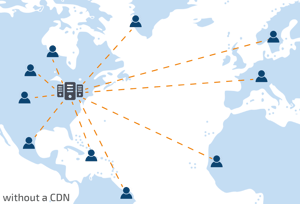

With users located all over the world, content doesn’t reach everyone at the same pace. The farther the end user is from the content, the longer the load time and the worse the experience.

The Internet urgently needed a way to ensure a good user experience regardless of distance, and an obvious solution came up: place the content closer to users. But how do you do that when your users are all over the world? You place servers in as many locations as possible and fill them with copies of the original content. This reduces the distance between the content and the user and, consequently, increases speed. This approach is known as caching.
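
Why does distance matter so much? Even a perfect network cannot beat the speed of light. The sketch below computes the minimum round-trip time from propagation delay alone, assuming light travels through optical fiber at roughly 200,000 km/s (about two-thirds of its speed in a vacuum). The distances are hypothetical, and real routes add extra path length, queuing and processing, so these figures are only lower bounds.

```python
FIBER_KM_PER_S = 200_000  # approximate speed of light in optical fiber (~2/3 of c)

def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on round-trip time (ms) from propagation delay alone."""
    return 2 * distance_km / FIBER_KM_PER_S * 1000

# Hypothetical distances: a far-away origin server vs. a nearby cache.
print(f"origin, 12,000 km away: >= {min_rtt_ms(12_000):.0f} ms per round trip")
print(f"cache,     100 km away: >= {min_rtt_ms(100):.0f} ms per round trip")
```

Loading a page takes several round trips (connection setup, TLS handshake, the request itself), so shaving distance off each one adds up quickly.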

When you access a popular photo or video, for example, you’re probably retrieving this content from the nearest cache (a temporary storage area), rather than directly from the original server. This saves you time and spares the core network the burden of additional traffic.
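
The basic hit-or-miss logic of a cache can be sketched in a few lines. This is a toy, in-memory version with a fixed time-to-live; the `EdgeCache` class and the `fake_origin` function are hypothetical names for illustration, and real CDN caches also honor HTTP caching headers and evict content under memory pressure.

```python
import time
from typing import Callable, Dict, Tuple

class EdgeCache:
    """Toy edge cache: serve a stored copy while fresh, otherwise fetch from origin."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes], ttl_s: float = 60.0):
        self._fetch = fetch_from_origin
        self._ttl = ttl_s
        self._store: Dict[str, Tuple[bytes, float]] = {}  # url -> (body, expiry time)
        self.hits = 0
        self.misses = 0

    def get(self, url: str) -> bytes:
        now = time.monotonic()
        entry = self._store.get(url)
        if entry is not None and entry[1] > now:
            self.hits += 1  # fresh copy at the edge: no trip to the origin
            return entry[0]
        self.misses += 1  # missing or expired: go back to the origin server
        body = self._fetch(url)
        self._store[url] = (body, now + self._ttl)
        return body

origin_calls = []

def fake_origin(url: str) -> bytes:
    origin_calls.append(url)  # record every request that reaches the origin
    return b"<photo bytes for " + url.encode() + b">"

cache = EdgeCache(fake_origin, ttl_s=300)
for _ in range(3):
    cache.get("/photos/popular.jpg")
print(cache.hits, cache.misses, len(origin_calls))  # 2 1 1
```

Only the first request travels to the origin; the next two are answered from the edge.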

Making caching happen: CDNs

Content Delivery Networks (CDNs) first appeared in the 90s, deploying the infrastructure that supports caching-based content delivery. Through a network of edge servers, they place replicas of the most frequently accessed content near end users (at the “edge” of the network).

The location of a group of servers is referred to as a PoP (Point of Presence). Each CDN PoP serves users in the geographic area where it is placed. As a practical example: if you had a US-hosted website and someone accessed it from Bangalore, that connection would go through a PoP in India. That way, content doesn’t need to travel halfway around the world and back.
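
A simplified way to picture PoP selection is "send each user to the geographically closest PoP". The sketch below does exactly that with great-circle (haversine) distance. The PoP names and coordinates are hypothetical, and real CDNs usually steer users with DNS-based mapping or anycast routing while also weighing load and network conditions, not distance alone.

```python
import math
from typing import Dict, Tuple

EARTH_RADIUS_KM = 6371.0

def haversine_km(a: Tuple[float, float], b: Tuple[float, float]) -> float:
    """Great-circle distance between two (latitude, longitude) points, in km."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))

def nearest_pop(user: Tuple[float, float], pops: Dict[str, Tuple[float, float]]) -> str:
    """Pick the PoP with the smallest straight-line distance to the user."""
    return min(pops, key=lambda name: haversine_km(user, pops[name]))

# Hypothetical PoP locations (approximate city coordinates).
POPS = {
    "ashburn": (39.04, -77.49),
    "mumbai": (19.08, 72.88),
    "chennai": (13.08, 80.27),
    "singapore": (1.35, 103.82),
}
bangalore = (12.97, 77.59)
print(nearest_pop(bangalore, POPS))  # chennai
```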

The benefits of CDNs

Reducing load times is therefore the most commonly cited benefit of CDNs, but there are a few more. Since these vary considerably from one CDN to another, we'll introduce some of them based on Cloudflare’s list:

- CDNs allow you to reduce bandwidth costs. Bandwidth consumption costs are a primary expense for websites. Through caching and other optimizations, CDNs reduce the amount of data an origin server must provide, thus reducing hosting costs for website owners.

- CDNs increase content availability and redundancy. Many things can take a website down, such as large amounts of traffic or hardware failure. Because CDNs are distributed, they can handle more traffic and withstand hardware failure better than many origin servers.

- CDNs help in improving website security. A CDN may improve security by providing DDoS mitigation, improvements to security certificates, and other optimizations.
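
The bandwidth saving in the first point comes down to simple arithmetic: whatever share of bytes the CDN serves from cache (its byte hit ratio) never has to leave the origin. The sketch below assumes a hypothetical month of 10,000 GB delivered to users and ignores the CDN's own fees and cache-fill overhead.

```python
def origin_egress_gb(total_served_gb: float, byte_hit_ratio: float) -> float:
    """Data the origin still sends when the CDN serves `byte_hit_ratio` of all bytes from cache."""
    if not 0.0 <= byte_hit_ratio <= 1.0:
        raise ValueError("byte_hit_ratio must be between 0 and 1")
    return total_served_gb * (1.0 - byte_hit_ratio)

# Hypothetical month: 10,000 GB delivered to users.
for ratio in (0.0, 0.8, 0.95):
    print(f"byte hit ratio {ratio:.0%}: origin sends {origin_egress_gb(10_000, ratio):,.0f} GB")
```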

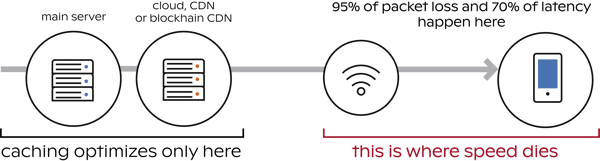

By now, you might think that CDNs have solved all our speed problems. In reality, some issues still cannot be solved by caching-based solutions. That is the case with dynamic content, which is generated on the fly (e.g. uploads, API calls or real-time data).

For example, on Facebook alone, more than 350 million photos are uploaded every day. The problem is that this type of content is not cacheable: when you need to access dynamic content, caching doesn’t help at all. Surprisingly, the same applies to wireless links.
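
Why can't a shared cache help with uploads or personalized API calls? A rough rule of thumb follows HTTP semantics: write requests such as uploads are not served from cache, and responses marked `no-store` or `private` must not be kept by a shared cache. The function below is a deliberately simplified, hypothetical check (the complete rules are in the HTTP caching specification, RFC 9111), and for brevity it assumes header names are given with exact `Cache-Control` casing.

```python
from typing import Mapping

def is_cacheable_at_edge(method: str, headers: Mapping[str, str]) -> bool:
    """Simplified shared-cache check; not the full RFC 9111 rules."""
    if method.upper() not in ("GET", "HEAD"):
        return False  # uploads and other writes create new content on the server
    directives = {
        d.strip().split("=", 1)[0].lower()
        for d in headers.get("Cache-Control", "").split(",")
        if d.strip()
    }
    if directives & {"no-store", "private"}:
        return False  # per-user or real-time responses opt out of shared caching
    # This toy rule requires an explicit freshness lifetime before caching.
    return bool(directives & {"max-age", "s-maxage"})

requests = [
    ("GET", {"Cache-Control": "public, max-age=86400"}),  # popular photo
    ("POST", {}),                                         # photo upload
    ("GET", {"Cache-Control": "private, no-store"}),      # personalized API call
]
for method, headers in requests:
    print(method, headers, "->", is_cacheable_at_edge(method, headers))
```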

Caching in a wireless world

We’re moving from a wired to a wireless world: by 2021, 63% of total IP traffic will come from WiFi or mobile devices (source). Remember when I said that Internet protocols were not designed to deal with the “new” Internet challenges? The same applies here: they’re not designed to cope with the challenges of wireless communication, in particular the natural instability of wireless links. Moreover, caching only optimizes the link between the content server and the network edge. But what happens when the problem is actually in the last mile?

With 95% of packet loss and 70% of latency happening in the wireless last mile, caching is of little help for wireless communication. It is time for a revolution similar to the one caching once was, to keep up with the new Internet demands. A new approach is urgently needed, one that optimizes content delivery as a whole: end-to-end.
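
The 70% figure sets a hard ceiling on what caching can achieve. In the idealized sketch below we assume edge caching removes *all* delay beyond the last mile, which is an optimistic assumption, and use a hypothetical 200 ms end-to-end latency. Even then, most of the delay remains.

```python
def best_case_latency_with_caching(total_ms: float, last_mile_share: float) -> float:
    """Latency left if edge caching eliminated every delay outside the last mile."""
    return total_ms * last_mile_share

total = 200.0  # hypothetical end-to-end latency in ms
remaining = best_case_latency_with_caching(total, 0.70)
print(f"{remaining:.0f} ms of {total:.0f} ms remain; at most {1 - remaining / total:.0%} saved")
```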